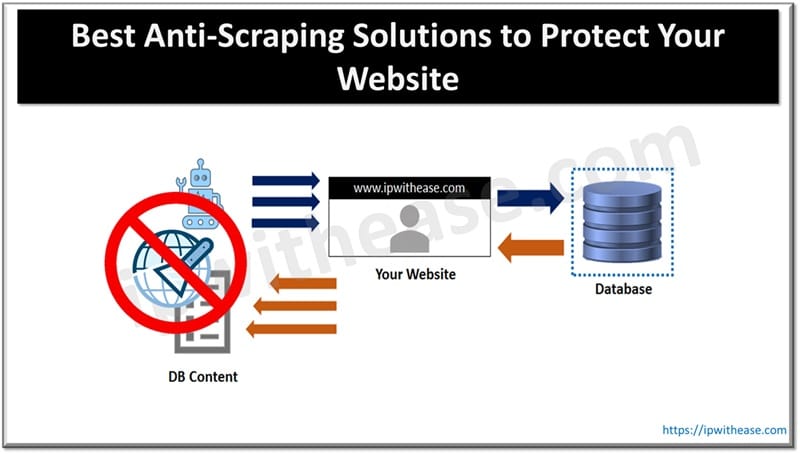

Unfortunately for honest website hosts, scraping is a persistent, well-organized threat. Modern scraping operations often erode revenue, distort analytics, and ruin user experience. According to Imperva’s much-cited “Bad Bot Report”, bad bots account for an alarming 37% of all internet traffic.

The financial impact of successful bad bots can be huge: millions in lost sales, skewed pricing, and damaged SEO results. But organizations – from e-commerce and travel sites to marketplace giants and media companies – can keep pace with trusted anti-scraping solutions. These detect and mitigate automated scraping at huge scale without compromising legitimate users’ experiences.

What is Anti-Scraping?

Anti-scraping refers to the mix of technologies, policies, and operational tactics used to identify and stop automated attempts to extract content from websites or APIs.

Traditional defenses like CAPTCHAs and rate limiting once worked well enough, but scrapers have evolved. They now use tools like residential proxies, headless browsers, AI-assisted solvers, and replay scripts designed to mimic human behavior.

Advanced anti-scraping platforms use a blend of client-side and server-side data, behavioral analytics, device fingerprinting, and even deception techniques. By combining these signals in real time, they can distinguish legitimate users from automated traffic and block access to high-value areas – like pricing pages, search endpoints, and sitemaps – before real damage occurs.

Choosing the Right Anti-scraping Solution

Picking the right tool isn’t just about locking things down – look for the right balance between security, usability, and business goals. A setup that’s too strict can frustrate genuine users; one that’s too loose leaves you open to attack. When comparing options, it helps to think about how they’ll fit into your day-to-day operations:

- Detection depth: Go for platforms that look at several layers of data (things like TLS fingerprints, client integrity, and behavior patterns), rather than relying only on IP reputation or simple JavaScript checks. The broader the view, the harder it is for scrapers to hide.

- Channel coverage: Your website isn’t the only target anymore. Make sure your defenses also cover APIs, mobile apps, and any data feeds you share. These are increasingly the first entry points attackers go after.

- Deployment flexibility: Every organization runs differently. Some prefer edge or CDN-level protection, while others need reverse proxies or in-app SDKs.

- Enforcement controls: It’s rarely smart to block traffic outright on the first sign of trouble. Risk-based enforcement – throttling suspicious traffic or prompting additional checks – helps avoid frustrating real customers while still keeping bots in check.

- Analytics and reporting: Look for tools that show more than just “blocked requests”: visual heatmaps, clear dashboards, and metrics like price scraping and content theft rates.

- Operational model: Managed services offer quicker setup and expert tuning, while self-serve platforms give more granular control to in-house teams.

- Bypass resistance: Scrapers adapt quickly. Frequent signal rotation, replay resistance, and deceptive tactics help you stay ahead.
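To make the detection-depth and enforcement-control criteria more concrete, here is a minimal Python sketch of how several signals could be combined into one risk score and mapped to a graduated response. The signal names, weights, and thresholds are assumptions chosen for illustration, not values used by any vendor in this article.

```python
# Hypothetical signal weights in percentage points; real products tune these
# from their own traffic data and do not publish them.
SIGNAL_WEIGHTS = {
    "tls_fingerprint_mismatch": 35,
    "client_integrity_failed": 30,
    "behavior_anomaly": 25,
    "bad_ip_reputation": 10,
}


def risk_score(signals: dict) -> int:
    """Add up the weights of every signal that fired (0 = clean, 100 = all fired)."""
    return sum(w for name, w in SIGNAL_WEIGHTS.items() if signals.get(name, False))


def choose_action(score: int) -> str:
    """Map a score to a graduated response instead of a binary block."""
    if score >= 80:
        return "block"
    if score >= 50:
        return "challenge"
    if score >= 25:
        return "throttle"
    return "allow"


if __name__ == "__main__":
    request_signals = {"tls_fingerprint_mismatch": True, "behavior_anomaly": True}
    score = risk_score(request_signals)
    print(score, choose_action(score))
```

In this sketch IP reputation alone never triggers friction, which mirrors the advice above not to rely on a single weak signal.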

Top 7 Providers

DataDome

DataDome offers rapid deployment and strong protection through both client- and server-side signals. It frequently rotates obfuscation methods, supports extensive SDKs, and provides detailed analytics via bot reports and heatmaps. It’s suitable for teams seeking powerful protection with minimal maintenance.

Kasada

Kasada aims to make scraping financially and technically unsustainable by attacking the economics of automation. It mutates its client-side defenses so frequently that bots have to solve new challenges every time they connect, and most attackers simply give up. Kasada’s resistance to replay or solver tools makes it a reliable choice for organizations facing persistent, adaptive threats.

HUMAN Security (Bot Defender)

HUMAN is for large businesses, combining bot mitigation with shared threat intelligence and fraud prevention. Its ability to integrate with marketing fraud detection makes it a solid option for companies seeking a unified fraud protection framework.

Netacea (Intent Analytics)

Netacea’s standout feature is its behavioral intent analysis, which focuses on how users behave rather than what devices they use. This is effective for retailers and marketplaces that need fine-grained insights to spot subtle scraping activity.

Reblaze Bot Management

Reblaze delivers a complete reverse-proxy solution that includes both bot management and WAF features. It’s a good fit for mid-sized businesses looking for simplicity and managed services that take care of ongoing updates and tuning.

F5 Distributed Cloud Bot Defense

This option works seamlessly across web apps and APIs. Leveraging F5’s expertise in intent analysis, it’s best suited for large enterprises with complex or distributed infrastructures.

Cequence Bot Defense

Cequence focuses on API discovery and protection; it’s a good choice for companies built around API-driven architectures. The flexible deployment options (via reverse proxy or sidecar) are a plus, though its web-level JavaScript coverage can vary by setup.

Best Practices for Anti-scraping

Anti-scraping is an ongoing process that requires continuous refinement and monitoring. Here are some best practices:

Placement and Coverage

Start by protecting your most sensitive endpoints: login and search pages, pricing and inventory data, sitemaps, APIs, and high-value landing pages. For mobile apps, integrate SDK signals like device integrity and jailbreak/root detection, and use API schema allowlists to prevent unauthorized interactions.
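An API schema allowlist can be as simple as a table of known routes and the query parameters each one accepts. The sketch below is only an illustration: the routes, parameter names, and the `is_allowed` helper are hypothetical, and a production setup would more likely derive the table from an OpenAPI description.

```python
# Hypothetical allowlist: (HTTP method, path) -> query parameters the route accepts.
ALLOWED_ROUTES = {
    ("GET", "/api/v1/products"): {"page", "category"},
    ("GET", "/api/v1/prices"): {"sku"},
}


def is_allowed(method: str, path: str, params: dict) -> bool:
    """Accept a request only if the route is known and no unexpected parameter is sent."""
    allowed_params = ALLOWED_ROUTES.get((method.upper(), path))
    if allowed_params is None:
        return False  # unknown route or method
    return set(params) <= allowed_params


if __name__ == "__main__":
    print(is_allowed("GET", "/api/v1/prices", {"sku": "A-100"}))
    print(is_allowed("GET", "/api/v1/prices", {"sku": "A-100", "dump": "all"}))
```

Rejecting unknown parameters as well as unknown routes narrows the room scrapers have to probe undocumented behavior.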

Invisible vs. Explicit Friction

Try to make your defenses invisible to real, human users. Behavioral scoring and risk-based throttling can quietly filter bots without interrupting the experience. Save CAPTCHAs or other explicit challenges for high-risk scenarios. A gradual enforcement model – observe, throttle, then block – works best.
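One way to picture risk-based throttling is a token bucket whose refill rate shrinks as a client’s risk score rises. The following is a rough sketch under stated assumptions: the `RiskAwareBucket` class, its parameters, and the linear slowdown are invented for illustration, and the caller passes the clock value explicitly so the behavior is easy to test.

```python
class RiskAwareBucket:
    """Token bucket whose refill rate drops as the client's risk score rises."""

    def __init__(self, capacity: float, base_rate: float):
        self.capacity = capacity    # maximum burst size, in requests
        self.base_rate = base_rate  # tokens per second for a zero-risk client
        self.tokens = capacity      # start with a full bucket
        self.last = None            # timestamp of the previous request

    def allow(self, now: float, risk: float) -> bool:
        """Return True if the request may pass; risk is expected in [0.0, 1.0]."""
        if self.last is not None:
            elapsed = now - self.last
            rate = self.base_rate * (1.0 - risk)  # riskier clients refill more slowly
            self.tokens = min(self.capacity, self.tokens + elapsed * rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


if __name__ == "__main__":
    bucket = RiskAwareBucket(capacity=2, base_rate=1.0)
    for t in (0.0, 0.0, 0.0, 1.0):
        print(t, bucket.allow(now=t, risk=0.0))
```

A low-risk visitor rarely notices the limit, while a suspicious client is slowed down without ever seeing a CAPTCHA.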

Challenge and Signal Design

Scrapers evolve quickly, so your defenses need to as well. Make sure to rotate client integrity checks and obfuscation layers, use canary endpoints to trap automated tools, and use multiple signals (fingerprinting, TLS/JA3/JA4 patterns, timing, and behavior) to boost accuracy.
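A canary endpoint is a URL that no human visitor has a reason to request, so any hit on it is a strong automation signal. Below is a minimal in-memory sketch; the paths and the `CanaryTrap` class are hypothetical, and a real deployment would persist flags and expire them over time.

```python
# Hypothetical decoy paths that are never linked from pages shown to humans.
CANARY_PATHS = {"/internal/price-feed-v2", "/sitemap-full.xml.bak"}


class CanaryTrap:
    """Flag any client that requests a decoy path and remember the flag."""

    def __init__(self, canary_paths):
        self.canary_paths = set(canary_paths)
        self.flagged = set()

    def observe(self, client_id: str, path: str) -> bool:
        """Record one request; return True if the client is flagged (now or earlier)."""
        if path in self.canary_paths:
            self.flagged.add(client_id)
        return client_id in self.flagged


if __name__ == "__main__":
    trap = CanaryTrap(CANARY_PATHS)
    print(trap.observe("client-a", "/products"))
    print(trap.observe("client-a", "/internal/price-feed-v2"))
    print(trap.observe("client-a", "/products"))
```

The flag works best as one more input to a combined score rather than as an automatic block, since a shared IP or a curious partner can occasionally trip a decoy.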

Testing and Monitoring

When introducing new anti-scraping rules, start in shadow mode so you can see how your policies affect real traffic. Watch more than just the number of blocks – keep an eye on things like failed logins, conversion rates, bounce rates, SEO crawler activity, and accessibility for users with disabilities. These details tell you whether your defenses are working or just getting in the way.

Watch business metrics too. If you’re trying to prevent content theft, price manipulation, or cart scraping, you’ll want to measure how those numbers change over time. That’s how you know if your investment is paying off.
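Shadow mode can be approximated offline by replaying logged requests through a candidate rule and counting what it would have blocked, without enforcing anything. The sketch below assumes each log record carries a `known_good` label (for example, a session that ended in a purchase); that field, the sample rule, and the `shadow_report` helper are all illustrative assumptions.

```python
def shadow_report(requests: list, rule) -> dict:
    """Evaluate a blocking rule against logged requests without enforcing it."""
    would_block = [r for r in requests if rule(r)]
    false_positives = [r for r in would_block if r["known_good"]]
    total = len(requests)
    return {
        "total": total,
        "would_block": len(would_block),
        "false_positives": len(false_positives),
        "would_block_rate": len(would_block) / total if total else 0.0,
    }


def too_fast(request: dict) -> bool:
    """Hypothetical rule: more than 100 requests per minute looks automated."""
    return request["requests_per_minute"] > 100


if __name__ == "__main__":
    logged = [
        {"requests_per_minute": 5, "known_good": True},
        {"requests_per_minute": 400, "known_good": False},
        {"requests_per_minute": 150, "known_good": True},  # e.g. a partner integration
        {"requests_per_minute": 20, "known_good": True},
    ]
    print(shadow_report(logged, too_fast))
```

A non-zero false-positive count is the cue to tune the rule or extend the allowlist before switching from observation to enforcement.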

Updating and Rotation

Scrapers don’t stand still, and neither can your defenses. For industries at higher risk, it’s smart to rotate deception assets and refresh detection signals every week or so. Also double-check your allowlists regularly. Trusted partners and legitimate bots (like Googlebot) shouldn’t get caught in the crossfire.
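Allowlisting by user agent alone is risky because scrapers can claim to be Googlebot. Google documents a reverse DNS lookup followed by a forward lookup as one way to verify its crawlers, and the sketch below follows that idea using Python’s standard `socket` module. The function name and the injectable lookup parameters are assumptions added so the logic can be exercised without network access; the default forward lookup shown here handles IPv4 only.

```python
import socket

# Hostname suffixes Google documents for its crawlers.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_verified_googlebot(ip: str, reverse_lookup=None, forward_lookup=None) -> bool:
    """Reverse-resolve the IP, check the domain, then confirm with a forward lookup."""
    if reverse_lookup is None:
        reverse_lookup = lambda addr: socket.gethostbyaddr(addr)[0]
    if forward_lookup is None:
        # gethostbyname_ex returns (hostname, aliases, ipv4_addresses).
        forward_lookup = lambda host: socket.gethostbyname_ex(host)[2]
    try:
        host = reverse_lookup(ip)
        if not host.lower().rstrip(".").endswith(GOOGLE_SUFFIXES):
            return False
        # The hostname must resolve back to the same IP, or the PTR record was spoofed.
        return ip in forward_lookup(host)
    except OSError:
        # socket.herror and socket.gaierror are OSError subclasses: treat as unverified.
        return False
```

Results are worth caching, since two DNS lookups per request would add noticeable latency.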

Running red-team exercises using real-world scraping tools can help you spot weak points you might have missed. And after any incident, take time to review what happened and what worked – those lessons are the best fuel for improving your setup.

Comparison Table

| Vendor | Detection Approach | Channels Covered | Deployment Options | Policy/Enforcement | Analytics & BI | Ops Model | Typical Strengths | Considerations |
|---|---|---|---|---|---|---|---|---|
| DataDome | Client+server ML, device fingerprint, deception | Web, API, Mobile | Edge/CDN, reverse proxy, in-app SDK | Score, throttle, tarpitting, step-up | Detailed bot reports, route heatmaps | Managed + self-serve | Fast time-to-value, low FP | Licensing by traffic; JS/SDK upkeep |
| Kasada | Signal mutation, anti-replay economics | Web, API | Edge/CDN, proxy | Block, slow, step-up | Attack forensics | Managed emphasis | Bypass resistance | Integration depth varies by stack |
| HUMAN | ML + intel from broader fraud graph | Web, API, Mobile | Edge, proxy, agent | Score, rules, step-up | Business-level insights | Managed enterprise | Multi-vector protection | Enterprise complexity |
| Netacea | Behavioral intent analytics | Web, API | Sensor + server | Dynamic throttling | Route-level KPIs | Adaptive tuning | Retail/launch defense | Behavior modeling ramp-up |
| Reblaze | Bot mgmt + WAF stack | Web, API | Managed reverse proxy | Rules + ML | Ops dashboards | Managed | All-in-one simplicity | Vendor-managed topology |
| F5 DC Bot Defense | ML + F5 ecosystem | Web, API, Mobile | F5 edge/cloud | Score, policy | Estate-wide | Managed/partner | F5 synergy, large estates | Best with F5 footprint |
| Cequence | API discovery + defense | API, Web | Reverse proxy, sidecar | Risk-based | API posture mgmt | Managed + self | API-first orgs | Web JS depth may vary |

Last Words

Modern scraping operations are stronger than ever, and your defenses need to be equally agile. Effective anti-scraping programs, like those outlined above, combine multi-signal detection, rapid signal rotation, and risk-based enforcement to protect revenue and SEO without degrading user experience.

Among the leading solutions, DataDome offers one of the best balances between protection strength and operational simplicity, making it a strong choice for teams that need quick, effective coverage.

ABOUT THE AUTHOR

IPwithease is aimed at sharing knowledge across varied domains like Network, Security, Virtualization, Software, Wireless, etc.