Artificial intelligence (AI) is changing the way the world works. It has transformed how we work and live, and it has a tremendous impact on our lives. However, powerful AI brings a big responsibility: it must be used sensibly. Ethical, conscientious development, deployment and management of AI is important so that we can mitigate its potential harm.

In this article we will learn about responsible AI, its key principles, and how to use AI within responsible boundaries.

What is Responsible AI?

Responsible AI is a multifaceted approach to the development, deployment and management of artificial intelligence systems. It aims to keep our interaction with those systems within ethical and permissible boundaries while remaining transparent and aligned with society's values. It is a set of principles and practices intended to ensure that AI systems are fair, understandable, robust and secure. Responsibility extends from data scientists to end users: everyone involved needs to carefully evaluate and mitigate the risks related to ethical use and its social and legal implications.

Core Principles of Responsible AI

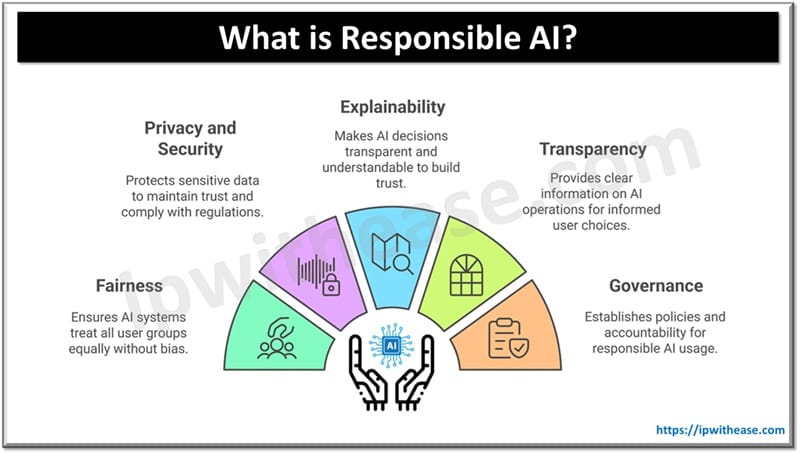

Let’s look at the five core principles of responsible AI usage:

Fairness

This principle concerns how an AI system affects different user groups, for example groups defined by gender, ethnicity or other demographic attributes. The goal is to ensure that AI systems do not produce biased outcomes or reinforce unfair bias, and that all user groups are treated equally regardless of demographics.
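One common way to check this kind of group-level fairness is to compare how often a model gives a positive decision to each group. The sketch below is a minimal illustration of that idea, often called demographic parity. The function names, group labels and toy data are all assumptions made for this example, and a small gap on this one metric does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(groups, predictions):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: a group label and a model decision (1 = approved).
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_gap(groups, predictions)
print(rates)  # approval rate per group
print(gap)    # a large gap suggests the groups are treated differently
```

In this toy data, group A is approved 75% of the time and group B 25% of the time, so the gap is 0.5, which would normally prompt a closer look at the model and its training data.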

Privacy and Security

AI systems must protect sensitive data from unwarranted exposure. Security and privacy are essential to maintain the trust of users and to comply with legal and regulatory requirements. The recently introduced EU AI Act describes controls and measures that depend on how AI is used, distinguishing high-risk, regulated, GenAI-based and unregulated systems.
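As a small illustration of protecting sensitive data, the sketch below masks email addresses and phone numbers in text before it is logged or sent to an AI system. The two patterns and the `redact` helper are assumptions made for this example. They cover only simple formats, and real systems usually need much broader detection of personal data.

```python
import re

# Illustrative patterns only; they catch simple emails and
# 3-3-4 digit phone numbers, not every real-world format.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text):
    """Replace matched emails and phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


sample = "Contact Jane at jane.doe@example.com or 555-123-4567."
print(redact(sample))  # Contact Jane at [EMAIL] or [PHONE].
```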

Explainability

This is the third core principle of responsible AI: we need to be able to evaluate the output produced by AI systems. It is about making decisions and actions transparent and presenting them in a human-understandable format. This is important for building trust and establishing accountability, especially in high-stakes areas such as finance and healthcare.
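For a simple model, an explanation can be as direct as showing how much each input pushed the result up or down. The sketch below assumes a hypothetical linear scoring model with made-up weights and feature names. More complex models generally need dedicated explanation techniques, so treat this only as an illustration of the idea.

```python
# Hypothetical weights for a linear scoring model (assumed for illustration).
WEIGHTS = {"income": 0.5, "debt": -0.75, "years_employed": 0.25}
BIAS = 1.0


def explain(features):
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {
        name: WEIGHTS[name] * value for name, value in features.items()
    }
    score = BIAS + sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return score, ranked


score, ranked = explain({"income": 4.0, "debt": 2.0, "years_employed": 4.0})
print(score)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Listing contributions in order of size gives a reviewer a human-readable reason for the decision, for example that debt lowered this applicant's score while income raised it.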

Transparency

Transparency means communicating information about AI systems so that users can make informed and sensible choices about using them. It involves stating how an AI system works, how it gathers its inputs and how it produces its output. Transparency helps end users treat AI output with appropriate caution and understand its full implications, which helps build user trust and obtain informed consent.

Governance

Governance is the overall control and monitoring of AI system usage within an organization. It includes establishing clear policies, procedures and accountability that set guidelines and boundaries for acceptable use of AI systems.

How to Build Responsible AI?

It is crucial to understand how to build responsible AI so that we gain its maximum benefits while minimizing the negatives.

- Potential Harm Identification – Recognize and understand the risks and threats associated with generative AI applications and, as a proactive measure, work out how these risks can be mitigated. Issues such as invasion of privacy, bias amplification, unfair treatment of certain groups of people and other ethical concerns need to be addressed when considering the use of AI systems.

- Mitigation of Harm – Measure the presence of potential harms and develop strategies to reduce their impact. Adjusting training data sets, reconfiguring AI models and adding filters or guardrails can help to minimize negative outcomes.

- Operating Solution Responsibly – The final aspect is to operate and maintain the AI solution in a responsible way. A well-defined deployment plan needs to consider all aspects of responsible usage. Ongoing monitoring, maintenance, logging, recording and updating of AI systems are crucial.
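The guardrail and logging ideas in the steps above can be sketched in a few lines. In this example a wrapper checks each prompt against a blocklist before calling the model and records every decision in a log for later review. The blocklist, the `fake_model` stand-in and the `guarded_generate` function are all hypothetical. A real deployment would more likely use a dedicated content classifier and a proper monitoring pipeline.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("ai_guardrail")

# Hypothetical blocklist; assumed here purely for illustration.
BLOCKED_TERMS = {"password", "social security number"}


def fake_model(prompt):
    """Stand-in for a real generative model call (assumption)."""
    return f"Echo: {prompt}"


def guarded_generate(prompt, model=fake_model):
    """Refuse prompts containing blocked terms and log every decision."""
    lowered = prompt.lower()
    for term in BLOCKED_TERMS:
        if term in lowered:
            logger.warning("Blocked prompt containing term: %s", term)
            return {"allowed": False, "output": None}
    output = model(prompt)
    logger.info("Served prompt of length %d", len(prompt))
    return {"allowed": True, "output": output}


print(guarded_generate("Summarize this article."))
print(guarded_generate("What is my password?"))
```

Keeping the check and the logging in one wrapper means every request passes through the same control point, which makes the system easier to monitor and audit.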

ABOUT THE AUTHOR

You can learn more about her on her LinkedIn profile – Rashmi Bhardwaj