
Cybersecurity faces a fundamental asymmetry: attackers operate with algorithmic speed, while defenders are limited by human cognitive capacity. This disparity has triggered a collapse in SOC resilience: 70% of teams report emotional overwhelm, and nearly half of all alerts go unaddressed daily. The “Collect and Detect” model (centralized logging plus manual triage) has reached its thermodynamic limit.

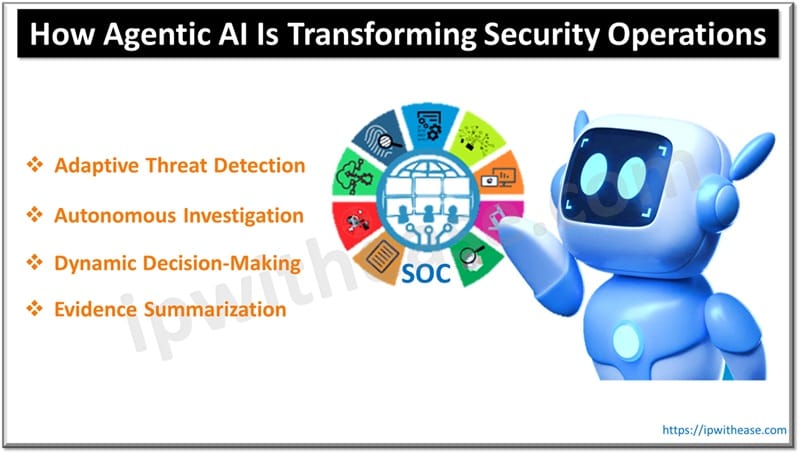

The industry’s answer is the Agentic SOC. This paradigm shifts operations from reactive, rule-based automation to proactive, goal-oriented autonomy. Unlike passive “co-pilots,” Agentic AI systems reason adaptively, use tools autonomously, and make dynamic decisions. By analyzing modern autonomous architectures, this report explores how elevating analysts from data processors to supervisors of synthetic workforces restores the defender’s strategic advantage.

The Entropy of the Modern SOC

For two decades, security doctrine relied on visibility. The logic was that ingesting enough telemetry and writing enough rules would inevitably catch every threat. But the complexity of cloud-native infrastructures has shattered this assumption. The sheer volume of data now outpaces human analysis.

The operational reality is bleak. A typical enterprise SOC is bombarded with over 3,800 alerts daily. Thoroughly analyzing just one (correlating identity, threat intel, and asset value) takes 10 to 30 minutes. At that rate, 3,800 alerts represent roughly 630 to 1,900 analyst-hours of work every day, so the arithmetic simply doesn’t work: there aren’t enough hours in the day. Consequently, the industry has normalized failure: 50% of security alerts are ignored daily. These aren’t just low-priority notifications; they are potential breach precursors, deliberately overlooked due to bandwidth constraints.
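The capacity gap can be checked with a few lines of arithmetic. This minimal sketch uses only the figures quoted in the paragraph (3,800 alerts per day, 10 to 30 minutes per alert); the 8-hour shift length is an illustrative assumption, not a number from the text.

```python
# Back-of-the-envelope SOC capacity check.
# ALERTS_PER_DAY and the 10-30 minute range are the figures quoted in the text;
# the 8-hour shift length is an illustrative assumption.

ALERTS_PER_DAY = 3_800
MINUTES_PER_ALERT = (10, 30)  # low and high per-alert estimates
SHIFT_HOURS = 8               # assumed length of one analyst shift


def analyst_hours_needed(alerts: int, minutes_per_alert: float) -> float:
    """Analyst-hours required to investigate every alert once."""
    return alerts * minutes_per_alert / 60


def shifts_needed(alerts: int, minutes_per_alert: float,
                  shift_hours: float = SHIFT_HOURS) -> float:
    """How many full analyst shifts that workload represents."""
    return analyst_hours_needed(alerts, minutes_per_alert) / shift_hours


if __name__ == "__main__":
    for minutes in MINUTES_PER_ALERT:
        hours = analyst_hours_needed(ALERTS_PER_DAY, minutes)
        shifts = shifts_needed(ALERTS_PER_DAY, minutes)
        print(f"{minutes} min/alert: {hours:,.0f} analyst-hours/day "
              f"(about {shifts:,.0f} eight-hour shifts)")
```

At the low estimate this comes to roughly 633 analyst-hours (about 79 shifts) per day; at the high estimate, 1,900 analyst-hours (about 238 shifts).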

The human toll is equally severe. Alert fatigue has degraded into a retention crisis. When analysts face thousands of stimuli daily, most of them false positives, they habituate, subconsciously tuning out noise to preserve their sanity. Research shows 70% of SOC teams feel emotionally overwhelmed, and 43% admit to turning off alerts to cope. This environment functions as a “turnover engine,” burning out junior analysts in less than two years and stripping SOCs of institutional memory.

Defining the Agentic AI SOC

From Automation to Autonomy

The industry’s historical band-aid, SOAR (Security Orchestration, Automation, and Response), failed to solve this because it is deterministic. It relies on brittle “if-this-then-that” playbooks. The Agentic SOC replaces these rigid scripts with goals.

Agentic AI is defined by Adaptive Reasoning: the ability to formulate a hypothesis and independently determine the best steps to verify it. Unlike a co-pilot (an intelligent assistant that waits for commands), an agent acts as a synthetic team member with initiative. It doesn’t wait to be asked. When it detects an anomaly, it starts the investigation itself, pursuing multiple leads simultaneously.
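The difference between a deterministic playbook and a goal-driven agent can be sketched in a few lines. This is a toy illustration under stated assumptions, not how any particular product works: the tool functions, their evidence scores, the "informativeness" priors, and the 0.7 decision threshold are all hypothetical, and the simple `max` over priors stands in for the planning step an LLM-based agent would perform.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Alert = dict
Tool = Callable[[Alert], float]


def soar_playbook(alert: Alert) -> str:
    """Deterministic 'if-this-then-that' rule: one fixed branch, no follow-up."""
    if alert["country"] != alert["home_country"]:
        return "escalate"
    return "close"


# Hypothetical evidence sources. Each returns a signed score:
# positive supports "malicious", negative supports "benign".
def ip_reputation(alert: Alert) -> float:
    return 0.6 if alert.get("ip_reputation") == "bad" else -0.1


def mfa_check(alert: Alert) -> float:
    return -0.4 if alert.get("mfa_passed") else 0.4


def change_ticket_lookup(alert: Alert) -> float:
    return -0.4 if alert.get("change_ticket") else 0.1


# name -> (tool, prior estimate of how informative it is)
TOOLS: Dict[str, Tuple[Tool, float]] = {
    "ip_reputation": (ip_reputation, 0.9),
    "mfa_check": (mfa_check, 0.8),
    "change_ticket": (change_ticket_lookup, 0.5),
}


@dataclass
class Finding:
    verdict: str
    score: float
    steps: List[Tuple[str, float]] = field(default_factory=list)


def agent_investigate(alert: Alert, tools: Dict[str, Tuple[Tool, float]] = TOOLS,
                      threshold: float = 0.7) -> Finding:
    """Goal-driven loop for the hypothesis 'this alert is malicious'.

    Keeps choosing the most informative unused tool and stops as soon as the
    accumulated evidence is decisive either way, instead of running a fixed script.
    """
    score = 0.0
    steps: List[Tuple[str, float]] = []
    pending = dict(tools)
    while pending and abs(score) < threshold:
        # Stand-in for the planning step an LLM-based agent would perform.
        name = max(pending, key=lambda n: pending[n][1])
        tool, _ = pending.pop(name)
        delta = tool(alert)
        score += delta
        steps.append((name, delta))
    if score >= threshold:
        verdict = "malicious"
    elif score <= -threshold:
        verdict = "benign"
    else:
        verdict = "needs_human_review"
    return Finding(verdict, round(score, 2), steps)
```

For a foreign login that passed MFA and matches a change ticket, the playbook escalates while the agent gathers all three pieces of evidence and concludes "benign". For a same-country login from a bad IP that failed MFA, the playbook closes the alert, while the agent reaches "malicious" after two steps without ever querying the ticket system.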

Core Capabilities

- Adaptive Threat Detection: Agents move beyond static signatures. They learn historical baselines and flag the behavioral deviations that rule-based systems miss, such as a legitimate admin account accessing a server at an impossible speed.

- Autonomous Investigation: This is the force multiplier. Upon detecting a signal, the agent queries endpoints, cross-references IP reputations, and correlates activity across the network. It investigates the 1st alert and the 3,000th with equal rigor, eliminating the “unaddressed gap.”

- Dynamic Decision-Making: Agents can execute low-risk actions based on confidence thresholds. If an agent identifies ransomware with 99% certainty, it isolates the host immediately, outpacing any human response.

- Evidence Summarization: Agents synthesize technical data into narrative case files. Instead of a log dump, the analyst receives a coherent summary: “Suspicious login detected. User verified via MFA. Activity matches scheduled change ticket #123. Recommended action: Close as False Positive.”
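Three of the capabilities above (baseline-driven detection, confidence-gated action, and narrative summarization) can be sketched with the standard library alone. This is a minimal illustration, assuming a z-score over an entity's own activity history as the "learned baseline", a 3-sigma alert limit, and a 0.99 auto-action threshold echoing the ransomware example; the `Verdict` type and its confidence value are hypothetical, and the template-based `summarize` is a stand-in for the model-generated narrative a real agent would produce.

```python
from dataclasses import dataclass
from statistics import mean, stdev


def deviation(history: list[float], observed: float) -> float:
    """Z-score of `observed` against this entity's own historical baseline."""
    mu, sigma = mean(history), stdev(history)  # stdev needs >= 2 data points
    if sigma == 0:
        return 0.0 if observed == mu else float("inf")
    return (observed - mu) / sigma


def is_anomalous(history: list[float], observed: float, z_limit: float = 3.0) -> bool:
    """Adaptive detection: flag activity far above the learned baseline (one-sided)."""
    return deviation(history, observed) > z_limit


@dataclass
class Verdict:
    label: str          # e.g. "ransomware"
    confidence: float   # 0.0 to 1.0, as reported by a (hypothetical) classifier
    action: str         # containment step the agent would take


def decide(verdict: Verdict, auto_threshold: float = 0.99) -> str:
    """Dynamic decision-making: act alone only at or above the threshold."""
    if verdict.confidence >= auto_threshold:
        return f"EXECUTED: {verdict.action}"
    return f"PROPOSED (awaiting analyst): {verdict.action}"


def summarize(findings: list[str], recommendation: str) -> str:
    """Evidence summarization: turn findings into a short narrative case note."""
    sentences = " ".join(f.rstrip(".") + "." for f in findings)
    return f"{sentences} Recommended action: {recommendation}."
```

With an hourly history of `[4, 5, 6, 5, 4, 6, 5]` server accesses, a burst of 40 is flagged while 6 is not; and `summarize(["Suspicious login detected", "User verified via MFA", "Activity matches scheduled change ticket #123"], "Close as False Positive")` reproduces the case note quoted in the list.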

The Architecture of Autonomous Security

Implementing an Agentic SOC requires more than software; it demands a data architecture capable of “thought speed.”

The AI Supervisor Model

Leading solutions like Autonomous Security Operations (ASO) align with the agentic SOC vision by combining agentic AI with structured, engineering-led SecOps workflows. Built on planetary-scale security operations platforms and enriched through unified data fabrics, these systems improve detection fidelity, drive deeper investigations, and provide consistent, context-aware decision support.

Rather than pursuing fully autonomous action immediately, modern architectures utilize an AI Supervisor model. In this hierarchy, agents automate repetitive tasks, explore investigative paths, and summarize findings, while human analysts guide decisions and maintain oversight. This enables organizations to progress from co-pilot assistance toward mature agentic SOC capabilities in a controlled, outcome-focused manner.
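The AI Supervisor model can be pictured as an approval queue: agents propose actions with their supporting evidence, and a human analyst approves or rejects each one. The sketch below is a hypothetical in-memory model of that hierarchy; the class names, agent and host identifiers, and the audit-trail behavior are illustrative assumptions rather than any product's API.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Proposal:
    agent: str
    action: str
    rationale: str                  # the agent's summarized evidence
    status: Status = Status.PENDING
    reviewer: Optional[str] = None  # analyst who made the call


@dataclass
class SupervisorQueue:
    """Agents propose actions; human analysts approve or reject them.
    Every proposal is kept, so the queue doubles as an audit trail."""
    proposals: List[Proposal] = field(default_factory=list)

    def propose(self, agent: str, action: str, rationale: str) -> Proposal:
        proposal = Proposal(agent, action, rationale)
        self.proposals.append(proposal)
        return proposal

    def pending(self) -> List[Proposal]:
        return [p for p in self.proposals if p.status is Status.PENDING]

    def review(self, proposal: Proposal, reviewer: str, approve: bool) -> None:
        if proposal.status is not Status.PENDING:
            raise ValueError("proposal has already been reviewed")
        proposal.status = Status.APPROVED if approve else Status.REJECTED
        proposal.reviewer = reviewer

    def approved_actions(self) -> List[str]:
        return [p.action for p in self.proposals if p.status is Status.APPROVED]
```

Keeping every proposal, including rejected ones, is a deliberate choice: the record of which agent suggestions humans overrode is what lets an organization decide, over time, which actions are safe to hand to agents outright.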

The Human-AI Partnership

The Agentic model is not about replacing analysts; it is about saving them. By offloading the repetitive “toil” of triage, AI liberates humans to focus on Threat Hunting and Detection Engineering.

Security leaders have noted that automated investigations are “more thorough and consistent than human-only efforts.” An agent follows procedure with 100% adherence, 24/7, without fatigue. This consistency provides peace of mind: the “night shift” is covered by an entity that never sleeps.

This dynamic acts as a force multiplier. A small team of five, supported by a workforce of agents, can achieve the coverage of a team of fifty. Organizations can scale their defenses non-linearly, absorbing data explosions with compute power rather than headcount.

Building the Agentic SOC

Transitioning to autonomy is a journey, not a switch flip.

- The Intern Phase (Co-Pilot): Start by deploying AI assistants to help analysts write queries and summarize alerts. This builds trust and improves data hygiene.

- The Agentic Phase (Semi-Autonomy): Introduce agents for specific, low-risk tasks, such as phishing triage. Give them read-only access initially; once their verdicts prove reliable, allow an agent that identifies a phishing attempt with >95% confidence to delete the email autonomously.

- The Supervisor Phase (Full Integration): As trust matures, agents take over complex investigations and containment actions on non-critical assets. The SOC shifts to the Supervisor model, where humans govern the AI workforce.
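The phased rollout above can be expressed as an authorization policy that decides, per action, whether an agent may act alone or must hand off to an analyst. This is a minimal sketch under stated assumptions: the action names, the rule table, and the 0.99 threshold for host isolation are hypothetical; only the phishing rule (>95% confidence in the Agentic Phase) and the restriction to non-critical assets come from the text.

```python
from enum import IntEnum


class Phase(IntEnum):
    INTERN = 1      # co-pilot: drafts queries and summaries only
    AGENTIC = 2     # semi-autonomy: narrow, low-risk actions such as phishing triage
    SUPERVISOR = 3  # full integration: containment on non-critical assets


# Read-only actions are always allowed to run without approval.
READ_ONLY = {"summarize_alert", "run_query"}

# Hypothetical rules: action -> (earliest phase allowed to run it alone,
#                                confidence the agent must exceed).
AUTONOMY_RULES = {
    "delete_phishing_email": (Phase.AGENTIC, 0.95),
    "isolate_host": (Phase.SUPERVISOR, 0.99),
}


def authorize(phase: Phase, action: str, confidence: float,
              asset_is_critical: bool = False) -> str:
    """Return "auto" if the agent may act alone, otherwise "human"."""
    if action in READ_ONLY:
        return "auto"
    rule = AUTONOMY_RULES.get(action)
    if rule is None:
        return "human"  # unknown actions always go to an analyst
    min_phase, min_confidence = rule
    if phase < min_phase or confidence <= min_confidence:
        return "human"
    if asset_is_critical:
        return "human"  # autonomous actions are limited to non-critical assets
    return "auto"
```

Defaulting unknown actions to "human" keeps the policy fail-closed: widening autonomy requires an explicit new rule rather than an omission.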

Governance and Risk

As we adopt this model, we must secure the agents themselves. Non-Human Identities (NHIs), meaning the API keys and tokens agents use, are a massive attack surface. If an adversary compromises an agent’s identity, they inherit its autonomy. Organizations must also guard against Shadow AI: unsanctioned AI tools that can leak sensitive data.
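Because a compromised agent identity carries exactly the permissions and lifetime of its credential, one common way to bound what an attacker inherits is to issue each agent narrowly scoped, short-lived credentials. The sketch below is a hypothetical in-memory model of that idea, not a real IAM API: the `AgentCredential` type, the scope strings such as `email:delete` and `edr:isolate`, and the 15-minute default lifetime are assumptions. In practice an identity provider or secrets manager would issue and verify such tokens.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class AgentCredential:
    agent_id: str
    scopes: FrozenSet[str]   # the only actions this identity may perform
    expires_at: float        # Unix timestamp; short lifetimes limit replay
    token: str = field(default_factory=lambda: secrets.token_urlsafe(32), repr=False)


def issue_credential(agent_id: str, scopes: Iterable[str], ttl_seconds: int = 900,
                     now: Optional[float] = None) -> AgentCredential:
    """Mint a narrowly scoped, short-lived credential for one agent."""
    now = time.time() if now is None else now
    return AgentCredential(agent_id, frozenset(scopes), now + ttl_seconds)


def allows(cred: AgentCredential, scope: str, now: Optional[float] = None) -> bool:
    """Deny anything outside the granted scopes or after expiry."""
    now = time.time() if now is None else now
    return now < cred.expires_at and scope in cred.scopes
```

A phishing-triage agent holding only `email:read` and `email:delete` cannot isolate hosts even if its token is stolen, and the token stops working once its lifetime ends. The `repr=False` on the token field keeps the secret out of logs that print the credential.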

Conclusion

The shift to the Agentic SOC is inevitable. The current model, pitting human cognitive speed against machine-speed attacks, is mathematically unsustainable. Solutions utilizing the AI Supervisor model demonstrate that by pairing human strategic reasoning with the elastic scalability of Agentic AI, we can move from reactive survival to proactive resilience. In this new era, success isn’t measured by how many alerts a SOC closes, but by how effectively it leverages its synthetic workforce to neutralize threats before they impact the business.

ABOUT THE AUTHOR

IPwithease is aimed at sharing knowledge across varied domains like Network, Security, Virtualization, Software, Wireless, etc.