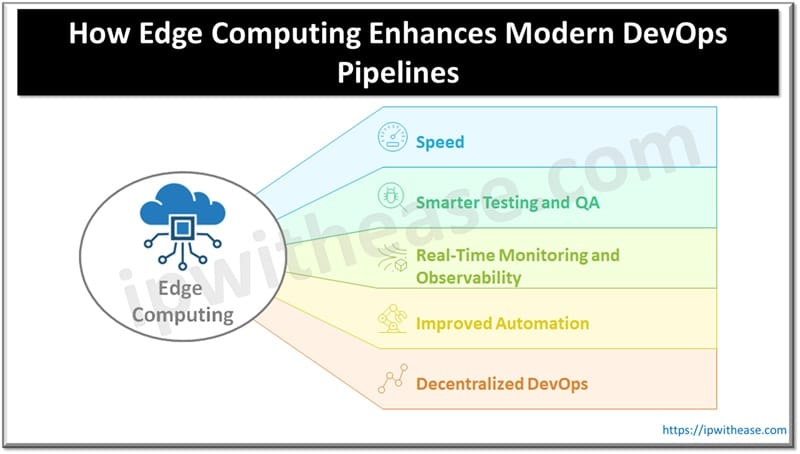

In the fast-changing world of software development and operations, speed, efficiency, and adaptability matter most. Centralized cloud models have served us well, but with the proliferation of IoT devices, 5G networks, and real-time data processing requirements, DevOps professionals are increasingly turning to edge computing. It’s no longer just a buzzword. Edge computing has become a practical reality that is reshaping DevOps, and in many ways it is making pipelines more intelligent, agile, and fault-tolerant.

Let’s take a closer look at how this shift is happening and what it means for day-to-day DevOps work.

Understanding Edge Computing: A Quick Refresher

Before going into detail, let’s step back a bit. What is edge computing, exactly?

In a nutshell, edge computing means processing data close to where it is generated, i.e., at the edge of the network. Rather than sending all the data to a central cloud for processing, part of the work is done locally on the devices themselves or on nearby edge servers. This reduces latency, conserves bandwidth, and lets applications respond in near real time.
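To make the bandwidth point concrete, here is a minimal sketch of an edge node reducing a batch of raw sensor readings to a compact summary before anything is sent upstream. The function name, the threshold, and the sample values are illustrative assumptions, not part of any particular platform.

```python
from statistics import mean


def summarize_readings(readings, threshold):
    """Reduce a batch of raw readings to one small summary.

    Only this summary (including any readings above `threshold`) would be
    sent upstream; the raw batch stays on the edge node.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        "anomalies": [r for r in readings if r > threshold],
    }


# Hypothetical temperature samples collected on one device.
batch = [21.0, 21.5, 22.0, 35.0, 21.5]
summary = summarize_readings(batch, threshold=30.0)
print(summary)
```

In a real deployment the batch would likely contain thousands of samples, so shipping one small dictionary instead of the raw stream is where the bandwidth saving would come from.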

Once you combine this approach with DevOps, you start to notice an intriguing shift in the way pipelines work.

Speed: The Most Evident and Immediate Benefit

DevOps loves speed. You want your patches, features, fixes, and upgrades to ship fast, but not at the cost of breaking things. Older approaches, especially those that centralize all processing in the cloud, can slam on the brakes when your data has to travel long distances or fight network congestion.

With edge computing in the picture, latency can fall sharply. You can test and deploy apps close to the user or device, which gives you faster feedback loops. Your CI/CD pipeline becomes more agile, code can be pushed to real edge devices as soon as it is ready, and deploy cycles can shrink, in some cases from hours to minutes.

Think of it this way: when latency isn’t a bottleneck, your DevOps team can move faster, respond quicker, and experiment more often without the usual lag in processing or decision-making.

Smarter Testing and QA at the Edge

Edge computing opens up new opportunities for testing. Instead of waiting for test data to travel across networks to a cloud-based QA environment, you can now test right where the action is.

Imagine rolling out firmware to thousands of IoT devices in the field. With an edge-first DevOps setup, you can deploy a release to a small batch of edge nodes first and observe how it behaves under real-world conditions. Not only do you reduce the risk of a large-scale failure, you also get highly localized feedback that you can use to adjust the release before rolling it out everywhere.

In other words, with edge computing your QA processes can be more adaptive, real-time, and informed by the environments your applications actually run in.
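The small-batch rollout described above can be sketched roughly as follows. This is only an illustration under stated assumptions: `deploy` and `is_healthy` are hypothetical callables you would supply yourself, and the node names and batch size are made up.

```python
import random


def pick_canaries(node_ids, canary_count, seed=0):
    """Choose a reproducible random subset of nodes for the first wave."""
    if not node_ids:
        raise ValueError("node_ids must not be empty")
    count = min(max(1, canary_count), len(node_ids))
    return sorted(random.Random(seed).sample(list(node_ids), count))


def staged_rollout(node_ids, deploy, is_healthy, canary_count=5, max_failures=0):
    """Deploy to a canary batch first; continue only if the batch looks healthy.

    `deploy` and `is_healthy` are caller-supplied (hypothetical) callables.
    Returns a (status, updated_nodes) tuple.
    """
    canaries = pick_canaries(node_ids, canary_count)
    for node in canaries:
        deploy(node)

    failures = [node for node in canaries if not is_healthy(node)]
    if len(failures) > max_failures:
        # Stop here: the remaining nodes never receive the release.
        return "aborted", canaries

    canary_set = set(canaries)
    remaining = [node for node in node_ids if node not in canary_set]
    for node in remaining:
        deploy(node)
    return "completed", canaries + remaining


nodes = [f"node-{i:03d}" for i in range(100)]

# Healthy canaries: the release continues to every node.
deployed = []
status_ok, updated_ok = staged_rollout(nodes, deployed.append, lambda node: True)

# Unhealthy canaries: the rollout stops after the first batch.
status_bad, updated_bad = staged_rollout(nodes, lambda node: None, lambda node: False)
print(status_ok, len(updated_ok), status_bad, len(updated_bad))
```

A real pipeline would probably add several waves of increasing size and a soak period between them; the single canary wave here just keeps the idea visible.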

Real-Time Monitoring and Observability

One of the more challenging aspects of running a modern DevOps pipeline is staying on top of what’s actually happening once software is in production. That’s where edge computing comes into its own. You can deploy monitoring and observability tools directly on edge devices or edge servers, so they evaluate performance metrics in real time and report problems immediately, without waiting for all of the data to reach a central hub.

This is often powered by what’s known as edge cloud – a hybrid layer that blends the power of cloud computing with the proximity of edge locations. It brings cloud-like resources closer to the user or the device, enabling low-latency processing and centralized coordination without sacrificing responsiveness.
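As a rough illustration of monitoring that runs on the edge node itself, the sketch below keeps a rolling window of latency samples and raises an alert locally when the window average crosses a threshold. The class name, window size, and threshold are assumptions chosen for the example, not the API of any specific observability tool.

```python
from collections import deque


class EdgeLatencyMonitor:
    """Keep a rolling window of latency samples and flag breaches locally."""

    def __init__(self, window_size=50, threshold_ms=200.0):
        # deque with maxlen drops the oldest sample automatically.
        self.samples = deque(maxlen=window_size)
        self.threshold_ms = threshold_ms

    def record(self, latency_ms):
        """Store one sample; return an alert dict if the window average is too high."""
        self.samples.append(latency_ms)
        average = sum(self.samples) / len(self.samples)
        if average > self.threshold_ms:
            return {"alert": "high_latency", "average_ms": average}
        return None


monitor = EdgeLatencyMonitor(window_size=3, threshold_ms=100.0)
alerts = [monitor.record(sample) for sample in [80, 90, 100, 150, 200]]
print(alerts)
```

Only the alert dictionaries would need to leave the node, which fits the idea of reporting instantly without shipping every raw metric to a central hub.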

Improved Automation for Unpredictable Situations

DevOps is about automation: scripts, pipelines, triggers, alerts. But for apps running across clouds and the edge, automation can’t be static. You can’t depend on constant connectivity, or on all edge nodes being alike, and you certainly can’t treat edge environments like typical servers.

As a result, DevOps teams are rethinking their approach to automation. They use edge-aware tools that can pull updates, run scripts, and even perform conditional rollbacks directly on the edge node. These tools are smart enough to run stand-alone without a cloud connection and to resync once the connection comes back.
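One way to picture this "work offline, resync later" behavior is a local rollback decision combined with a store-and-forward queue. The sketch below is a simplified assumption of how such a tool might behave; `apply_update`, `OfflineActionQueue`, and the simulated `send` function are hypothetical names, not a real product’s interface.

```python
from collections import deque


def apply_update(install, health_check, rollback):
    """Install locally, verify, and roll back on the node itself if the check fails."""
    install()
    if health_check():
        return "updated"
    rollback()
    return "rolled_back"


class OfflineActionQueue:
    """Buffer events produced while offline and flush them once reconnected."""

    def __init__(self, send):
        # `send` uploads one event and raises ConnectionError when offline.
        self.send = send
        self.pending = deque()

    def report(self, event):
        """Queue an event and try to deliver everything that is pending."""
        self.pending.append(event)
        return self.flush()

    def flush(self):
        """Send queued events in order; stop at the first failure. Returns count sent."""
        sent = 0
        while self.pending:
            try:
                self.send(self.pending[0])
            except ConnectionError:
                break  # still offline; keep the event for the next attempt
            self.pending.popleft()
            sent += 1
        return sent


# Simulated uplink: a flag we can flip to mimic connectivity returning.
link = {"online": False}
received = []


def send(event):
    if not link["online"]:
        raise ConnectionError("uplink unavailable")
    received.append(event)


queue = OfflineActionQueue(send)

# The health check fails, so the node rolls back without asking the cloud.
status = apply_update(install=lambda: None, health_check=lambda: False, rollback=lambda: None)
sent_while_offline = queue.report({"node": "edge-01", "status": status})

link["online"] = True
flushed = queue.flush()
print(status, sent_while_offline, flushed, received)
```

The event is only removed from the queue after `send` succeeds, so a dropped connection in the middle of a flush would not lose the report.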

Laying the Foundation of Decentralized DevOps

Edge computing doesn’t just enhance DevOps, it’s pushing it toward something fundamentally new. Instead of thinking of DevOps pipelines as centralized systems with centralized workflows, we’re now seeing the rise of decentralized DevOps.

Imagine a world where different parts of your application can be developed, tested, deployed, and monitored in parallel across different parts of your infrastructure, or even on separate edge nodes. You can take microservices a step further, not only architecturally but also operationally.

Wrapping It Up

If edge computing has been an afterthought in your DevOps approach, it’s time to reconsider. As our digital world moves into real-world devices, physical spaces, and time-critical use cases, edge-first thinking is becoming more important.

Whether you’re designing smart cars or ultra-responsive web applications, bringing edge computing into your DevOps pipeline isn’t just smart, it’s the future.

And that future is already here.

ABOUT THE AUTHOR

IPwithease is aimed at sharing knowledge across varied domains like Network, Security, Virtualization, Software, Wireless, etc.