Introduction to SPOF in Distributed Systems

Distributed systems are becoming more complex and more widespread. A distributed system is a group of computers that work together but appear to end users as a single system. The machines share state, operate concurrently, and can fail independently, ideally without affecting the uptime of the system as a whole. In a traditional system everything is stored on a single machine, whereas a distributed system lets users access data from any of several machines running in parallel. The usual motivation is horizontal scalability.

In this article we will learn how to avoid single points of failure in distributed systems, the strategies adopted, and their advantages and limitations.

Single Point of Failure (SPOF)

A single point of failure (SPOF) is any non-redundant part of a system whose failure could bring down the entire system. It is a major obstacle to high availability of an application, system or network. A SPOF can stem from a design flaw, a configuration issue or an implementation issue, and it is risky because one small fault can cause the whole system to fail. SPOFs are undesirable in any system that demands high availability and reliability, such as supply chains, networks and software applications.

Causes of SPOF in Distributed Systems

Single points of failure need to be identified when a system is being architected, before they can cause any harm. The best way to detect them is to examine every component of the system and ask what would happen if that component failed.
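One way to carry out that examination is to model the system and fail each component in turn. The sketch below is a minimal illustration, assuming a simplified model in which the system stays up as long as every tier has at least one healthy instance. The tier and instance names are hypothetical.

```python
def system_is_up(tiers, failed):
    """The system is up if every tier still has at least one healthy instance."""
    return all(
        any(instance not in failed for instance in instances)
        for instances in tiers.values()
    )


def find_spofs(tiers):
    """Fail each instance on its own and report the ones that take the system down."""
    all_instances = [inst for instances in tiers.values() for inst in instances]
    return [inst for inst in all_instances if not system_is_up(tiers, {inst})]


# Hypothetical inventory: two web servers, but only one database and one load balancer.
tiers = {
    "web": ["web-1", "web-2"],
    "db": ["db-1"],
    "lb": ["lb-1"],
}
print(find_spofs(tiers))  # ['db-1', 'lb-1']
```

Real systems have richer dependencies than a flat list of tiers, so this only captures the basic idea: any component whose individual failure takes the whole system down is a SPOF.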

There could be multiple reasons for failure in distributed systems which we discuss here in detail.

- Unreachable database – a service needs to read configuration items from a non-replicated database at startup; if the database is not reachable, the service won’t start.

- Expired web certificate – an expired certificate can prevent clients from opening a connection to the service.

- Unreliable networks – a client makes a remote network call, sends a request to the server and waits for a response that never arrives. Slow networks and high latency are silent killers for distributed systems: the waiting degrades response times in ways that are difficult to debug.

- Slow processes – resource leaks are a main cause of slow processes. Memory leaks are the best-known kind and show up as a steady increase in memory consumption over time. A garbage-collected runtime does not help if a reference to an object that is no longer needed is kept somewhere, because the collector cannot reclaim it. The leak keeps consuming memory, and the operating system starts swapping memory pages to disk constantly to free up any shred of memory. The constant paging and garbage collection eat up CPU cycles and make the process slow. Eventually, when no physical memory is left and swap space is exhausted, the process can no longer allocate memory and operations fail.

- Unexpected load – every system has a limit to how much load it can take without scaling. If load grows gradually there is time to scale, but a sudden spike leaves no time to plan the scaling of the service. The rate and type of incoming requests can change over time, sometimes suddenly, for a variety of reasons:

  - Requests might have seasonality: depending on the hour of the day, the service is hit by users in different geographies.

  - Some requests are more expensive than others and abuse the system in ways that were not anticipated, such as scrapers slurping in data from the site at superhuman speed.

  - Some requests could be malicious, such as DDoS attacks that try to saturate the service’s bandwidth and deny access to legitimate users.

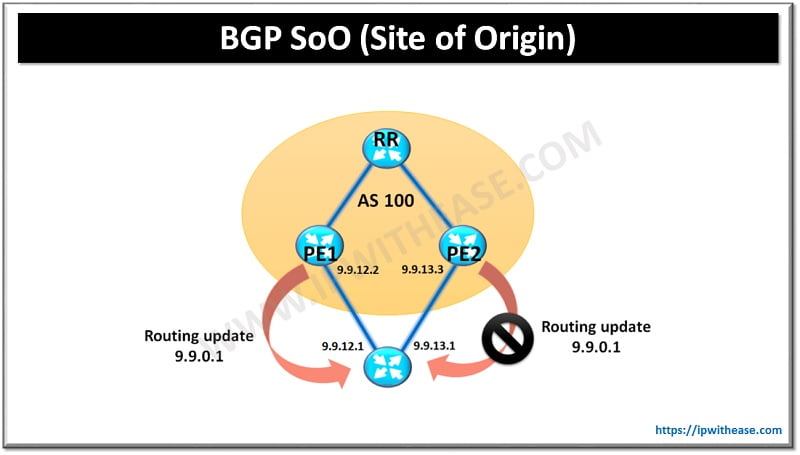

- Cascading failures – if a system has hundreds of processes, a small percentage of them failing won’t make much difference. A cascading failure is different: a fault in one part spreads until a major portion of the overall system fails. For example, suppose two database replicas share a given number of transactions per second. If one replica becomes unavailable because of a network fault or some other reason, the load balancer detects the fault and removes it from the pool. All the transaction load now lands on the remaining replica, which cannot handle so many incoming requests; clients start experiencing failures and timeouts, and eventually the replica stops responding. The load balancer detects this failure too and removes that replica from the pool. If the replica that failed earlier comes back online in the meantime and is added to the pool again, it is immediately flooded with requests that kill it, and this feedback loop can repeat multiple times. Cascading failures are hard to stop once they start.
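The replica example can be played out with a small simulation. This is a rough sketch, assuming the load balancer spreads load evenly over the healthy replicas and that a replica fails as soon as its share exceeds its capacity. The capacities and load figures are made up for illustration, and the recovery half of the feedback loop is not modelled.

```python
def simulate_cascade(capacities, total_load, failed=frozenset()):
    """Return the indices of replicas still healthy once the cascade settles.

    capacities: max requests/second each replica can serve (illustrative numbers)
    total_load: requests/second arriving at the load balancer
    failed:     indices of replicas that are already down
    """
    healthy = [i for i in range(len(capacities)) if i not in failed]
    while healthy:
        share = total_load / len(healthy)  # even split across healthy replicas
        overloaded = [i for i in healthy if capacities[i] < share]
        if not overloaded:
            break  # everyone can cope with their share; the system is stable
        # Overloaded replicas drop out, pushing more load onto the rest.
        healthy = [i for i in healthy if i not in overloaded]
    return healthy


# Two replicas of 600 req/s each comfortably share 1000 req/s ...
print(simulate_cascade([600, 600], 1000))              # [0, 1]
# ... but losing one pushes all 1000 req/s onto the other, which then fails too.
print(simulate_cascade([600, 600], 1000, failed={0}))  # []
```

With a third replica of the same size, losing one leaves 500 req/s per survivor and the cascade never starts, which is why spare capacity matters as much as replication.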

Techniques to avoid single point of failure in distributed systems

There are a variety of best practices for mitigating failures, such as circuit breakers, load shedding, rate limiting and bulkheads. Let’s look at them in more detail.

Circuit Breakers

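Circuit breakers increase reliability in distributed systems by automatically blocking access to a remote service when the number of failed remote calls exceeds a certain threshold. The breaker wraps the remote call and starts in its closed state, in which calls pass through. Once the threshold is exceeded it opens and further calls fail immediately; after a timeout it lets a trial call through and resets to the closed state if that call succeeds.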
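To make the idea concrete, here is a minimal sketch of a circuit breaker with the usual closed, open and half-open states. The class name, parameter names and default values are assumptions chosen for illustration, not taken from any particular library, and the sketch is not thread-safe.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the breaker is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # failures before tripping
        self.reset_timeout = reset_timeout          # seconds to stay open
        self.clock = clock                          # injectable for testing
        self.failures = 0
        self.opened_at = None                       # None means closed

    @property
    def state(self):
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # timeout elapsed: allow a trial call
        return "open"

    def call(self, func, *args, **kwargs):
        state = self.state
        if state == "open":
            raise CircuitOpenError("circuit is open; failing fast")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures, (re)opens the breaker.
            if state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success resets the breaker to its closed state.
        self.failures = 0
        self.opened_at = None
        return result
```

A caller would wrap each remote call, for example `breaker.call(fetch_profile, user_id)` with a hypothetical `fetch_profile` function, and treat `CircuitOpenError` as a signal to fall back or return an error quickly instead of waiting on a dependency that is known to be failing.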

Load Shedding

Load shedding is used to detect traffic congestion and fail gracefully under it. When a service cannot handle all incoming requests, load shedding decides which requests to serve and which to reject, so that the overload does not turn into a large cascading failure across the distributed system. It is achieved by limiting the number of concurrent requests to the system, limiting how long requests may wait, and so on.
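As an illustration of the first of those levers, the sketch below caps the number of requests in flight and rejects the excess immediately rather than queueing it. The `LoadShedder` name, the status codes and the message are assumptions made for this example.

```python
import threading


class LoadShedder:
    """Caps concurrent requests; anything over the cap is rejected, not queued."""

    def __init__(self, max_in_flight):
        self._slots = threading.Semaphore(max_in_flight)

    def handle(self, handler, *args):
        # Non-blocking acquire: if no slot is free, shed the request right away.
        if not self._slots.acquire(blocking=False):
            return 503, "overloaded, try again later"
        try:
            return 200, handler(*args)
        finally:
            self._slots.release()  # free the slot whether the handler succeeded or not


shedder = LoadShedder(max_in_flight=100)
status, body = shedder.handle(lambda name: f"hello {name}", "world")
```

Rejecting early is cheap for the server and gives clients a clear signal to back off, whereas letting requests pile up tends to make every request slow.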

Rate Limiting

Rate limiting is used by systems that expose endpoints that might suffer from overloading. The server sets limits on how many requests a client may send, and clients need to obey those limits so that all requests are processed properly.
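The article does not prescribe a particular algorithm, so the sketch below uses a token bucket, which is one common way to enforce a rate limit. Each client gets a bucket that refills at a fixed rate, and a request is allowed only if a token is available. The class and parameter names are assumptions for illustration.

```python
import time


class TokenBucket:
    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate              # tokens added per second
        self.capacity = capacity      # maximum burst size
        self.clock = clock            # injectable for testing
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self, cost=1.0):
        """Return True if the request may proceed, False if it should be rejected."""
        now = self.clock()
        # Refill according to the time elapsed, never exceeding the capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# One bucket per client: at most 5 requests/second, with bursts of up to 10.
bucket = TokenBucket(rate=5, capacity=10)
if not bucket.allow():
    pass  # e.g. reply with HTTP 429 Too Many Requests
```

On the client side, obeying the limit usually means slowing down or retrying later when the server signals that the limit has been reached.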

Bulkheads

In the bulkhead technique, the application is split into multiple components, and their resources are isolated in such a way that the failure of one component does not affect the others.
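One simple way to get this isolation inside a single process is to give each component its own bounded thread pool, so that a component that hangs can only exhaust its own workers. The following is a minimal sketch under that assumption; the `Bulkheads` class and the component names are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class Bulkheads:
    """One bounded thread pool per component, so components cannot starve each other."""

    def __init__(self, limits):
        # limits maps a component name to its maximum number of worker threads.
        self._pools = {
            name: ThreadPoolExecutor(max_workers=n, thread_name_prefix=name)
            for name, n in limits.items()
        }

    def submit(self, component, func, *args, **kwargs):
        """Run func on the pool reserved for the given component; returns a Future."""
        return self._pools[component].submit(func, *args, **kwargs)

    def shutdown(self):
        for pool in self._pools.values():
            pool.shutdown(wait=True)


bulkheads = Bulkheads({"payments": 2, "search": 2})

# Simulate a hung dependency: both payments workers block on an event.
gate = threading.Event()
stuck = [bulkheads.submit("payments", gate.wait) for _ in range(2)]

# Search has its own workers, so it still answers while payments is stuck.
print(bulkheads.submit("search", lambda: "results").result(timeout=5))

gate.set()          # release the stuck workers
bulkheads.shutdown()
```

The same principle applies at larger scales, for example by giving components separate connection pools, processes or machines.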


ABOUT THE AUTHOR

You can learn more about her on her LinkedIn profile – Rashmi Bhardwaj