Introduction

Proxies, VPNs, and scraping APIs are some of the main tools individuals and enterprises consider when privacy becomes a concern during web scraping. While this is a reasonable approach, there is a lot of confusion over which tool fits which job and which is most effective.

In this article, we will go over the distinctions between these tools and provide clear use cases for each in web scraping.

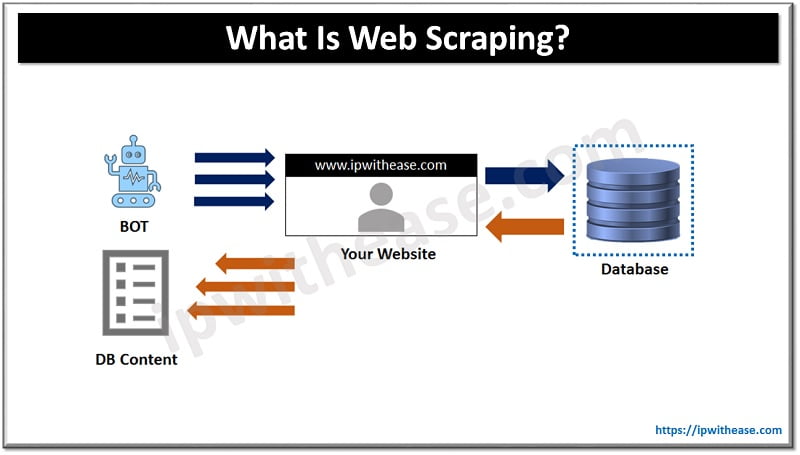

What Is Web Scraping?

Web scraping (or data scraping) is a technique used to collect content and data from the internet. It enables you to extract any information (for example, product listings or stock market data) in bulk and have the results returned in a structured file format, like XML or JSON. This method enables developers, data scientists, and businesses to gather large amounts of data efficiently.

Challenges in Web Scraping

During web scraping, you’ll likely face a few challenges, such as:

- IP blocking, when websites detect too many requests from the same address

- CAPTCHAs that distinguish bots from humans

- Geo-restrictions that limit content based on location

And many more.

Overcoming these requires strategic tools like proxies, VPNs, or scraping APIs, which allow your bots to reach the target site without getting blocked.

VPNs: When and Why to Use Them in Web Scraping

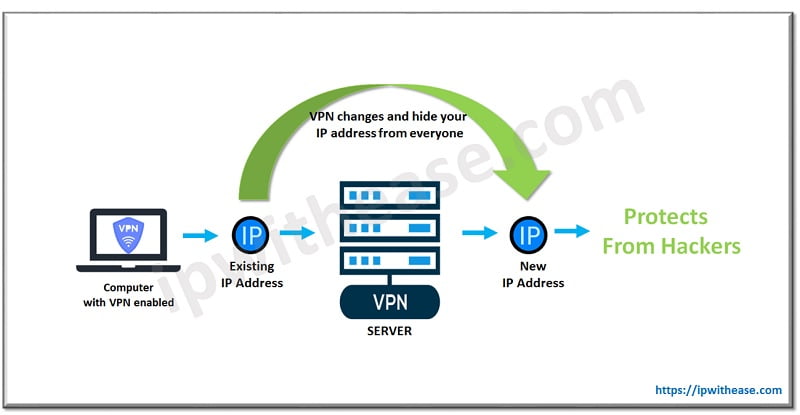

A Virtual Private Network (VPN) is a service that secures your internet connection by encrypting it and routing it through a server in your chosen location. It allows you to access and transmit data as if connected to a private network.

VPNs work at the operating system level, rerouting all traffic from your device, whether it comes from a browser or another app. They encrypt the data between your device and the VPN server, which prevents even your Internet Service Provider (ISP) from seeing your online activities. They also mask your IP address, making it appear as if you are accessing the Internet from the VPN server’s location.

However, most VPNs come with performance limitations that affect scalability. Since VPNs typically offer limited IP rotation capabilities, they are unsuitable for high-volume scraping operations.

VPNs are best suited for:

- Small-scale scraping projects

- Cases where data privacy is the primary concern

- Situations requiring access to geo-restricted content

- Projects with minimal requirements for IP rotation

Proxies: The Backbone of Scalable Scraping

A proxy acts as a middleman between your device and the destination site. When you use a proxy, your internet requests are directed through a proxy server, which then communicates with the website on your behalf.
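
As a minimal sketch of that flow, the snippet below configures Python's standard `urllib` to send requests through a proxy. The proxy address is a placeholder from a documentation-only IP range, not a real server, and `build_proxy_opener` is a helper name made up for this example.

```python
import urllib.request

# Hypothetical proxy address (documentation-only IP range); replace with your own.
PROXY_URL = "http://203.0.113.10:8080"


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that routes HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxy_opener(PROXY_URL)
# With a working proxy, the target site would see the proxy's IP, not yours:
# response = opener.open("https://example.com", timeout=10)
```

The request itself is left commented out because it only succeeds when the placeholder is replaced with a reachable proxy.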

Proxy servers are mainly used for anonymity and security, and the level of anonymity varies depending on the proxy type. For instance, residential and ISP proxies provide greater anonymity than datacenter proxies.

Unlike a VPN, a proxy doesn’t encrypt your internet traffic. While it can change your IP address and hide your location, it doesn’t provide the same level of security as a VPN. This doesn’t mean one is better than the other, though. Each has its advantages and use cases, which we’ll explore in the subsequent sections.

Types of Proxies

There are many different types of proxy servers used to scrape information from the web, and each has different properties. Here are some of the most common ones used for web scraping:

Datacenter Proxy Servers

These are proxy servers housed in a data center. They are more affordable than residential proxies and are a common choice for collecting large amounts of information from the web. Datacenter proxies replace the user’s original IP address with one temporarily leased from a data center. They offer high speeds but are more easily identified and blocked by target sites.

To avoid getting blocked, datacenter proxies are commonly combined with IP rotation, which improves the chances of successful scraping.

Rotating Proxy Servers

When scraping a website for information, you may need to access it multiple times. However, the website’s security protocols may consider these multiple requests from one IP address suspicious activity.

Therefore, web scrapers use rotating proxy servers, where the IP addresses are automatically and randomly rotated so that the requests seem to come from different addresses. Rotating proxies are used in conjunction with datacenter and residential proxies to distribute the overall traffic into multiple sessions, making it harder for bot-blockers to identify automated scraping.
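
A minimal sketch of the idea, assuming you already have a list of proxy endpoints: the addresses below are placeholders, and `assign_proxies` and `pick_random_proxy` are illustrative helper names. The first function rotates in a fixed round-robin order, the second picks a proxy at random for each request.

```python
import itertools
import random

# Hypothetical proxy endpoints (documentation-only addresses).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in round-robin order."""
    rotation = itertools.cycle(proxies)
    return [(url, next(rotation)) for url in urls]


def pick_random_proxy(proxies, rng=random):
    """Pick a proxy at random, so consecutive requests vary unpredictably."""
    return rng.choice(proxies)


plan = assign_proxies(["/page/1", "/page/2", "/page/3", "/page/4"], PROXIES)
for url, proxy in plan:
    print(url, "via", proxy)
```

Round-robin spreads load evenly across the pool, while random selection avoids a predictable pattern. Commercial rotating proxies usually do this on the provider's side behind a single endpoint.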

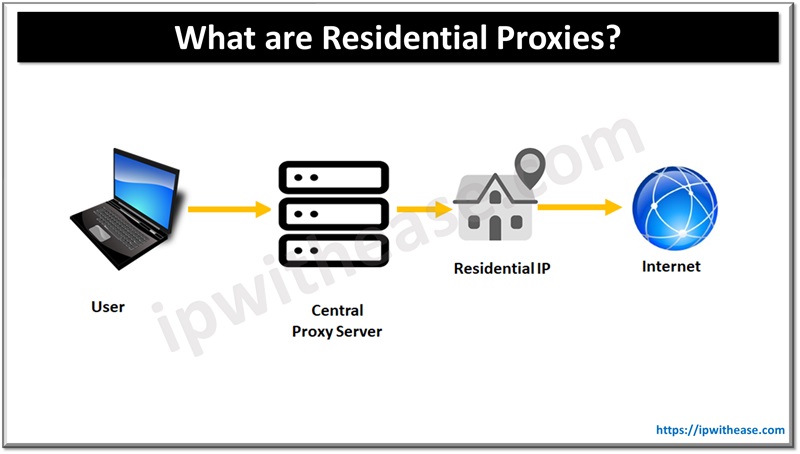

Residential Proxy Servers

These proxies use IP addresses assigned to real devices at specific physical locations. As a result, a request coming from a residential proxy server seems to come from a regular user and is more likely to pass security checks. In addition, using several residential proxies and rotating the requests between them can help you avoid being banned from a website. Overall, residential proxies are used by people who want to overcome country or location bans.

For example, a user in India may not be allowed to access a website in the US. However, using a US-based residential proxy server, the user’s computer from India can access content meant only for US audiences.

Residential proxies are also harder to detect because their IP addresses are issued by an ISP, making them the best option for scraping highly protected sites that use bot-blockers like DataDome and PerimeterX.

Best Practices for Managing Large-Scale Scraping

- IP Rotation: Regularly change your IP addresses to mimic organic human traffic by distributing your requests across multiple sessions.

- Session Management: Each proxy should maintain its own session with appropriate cookies and headers.

- Handling Rate Limits: Keep your scraper’s request rate below the target site’s rate limits to avoid getting blocked and to prevent the website from being overwhelmed.

- Rotating User-Agents: Change the User-Agent header to simulate requests from different browsers and devices.

- Bypassing CAPTCHAs: Incorporate CAPTCHA-solving services into your scrapers to handle any CAPTCHA verifications.
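
Two of these practices, rate limiting and User-Agent rotation, can be sketched in a few lines. This is an illustration under stated assumptions rather than a production setup: the `Throttle` class and `build_headers` helper are hypothetical names, the User-Agent strings are only examples, and the two-second interval is arbitrary.

```python
import random
import time

# Example User-Agent strings; in practice, keep a current list of real browser values.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:120.0) Gecko/20100101 Firefox/120.0",
]


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self._min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def build_headers(rng=random):
    """Return request headers with a randomly chosen User-Agent."""
    return {"User-Agent": rng.choice(USER_AGENTS)}


throttle = Throttle(min_interval=2.0)  # arbitrary: at most one request every 2 seconds
throttle.wait()                        # the first call returns immediately
headers = build_headers()
```

Calling `throttle.wait()` before every request keeps the scraper under a fixed request rate; the right interval depends on the target site's limits.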

Overall, the right proxy type depends on the task at hand. However, while proxies can be helpful for web scraping, some people prefer to use scraping APIs.

APIs: An All-in-One Scraping Solution for Efficiency

Scraping APIs provide a comprehensive alternative to proxies and VPNs by abstracting the complexities involved in managing these tools. These services handle tasks like IP rotation, proxy management, CAPTCHA solving, and more, allowing you to focus solely on data extraction.

What Are Scraping APIs?

A scraping API is a service (either free or paid) that enables you to access website data without worrying about underlying security measures. By making simple API calls, you can retrieve information from websites without dealing with technical challenges like proxies or CAPTCHAs.

Features of Scraping APIs

- Built-In Proxy Management: Automatically handle IP rotation and provide a vast pool of proxies.

- CAPTCHA Solving: Integrated mechanisms to bypass or solve CAPTCHAs.

- Geo-Targeting: Access region-specific content without manual proxy configuration.
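
To show what "simple API calls" can look like, here is a sketch that builds a request URL for an imaginary scraping API. The endpoint and the parameter names (`api_key`, `url`, `country`, `render_js`) are assumptions made for this example; each real provider defines its own, so check its documentation.

```python
from urllib.parse import urlencode

# Hypothetical endpoint; real providers publish their own URL and parameters.
API_ENDPOINT = "https://api.scraper.example/v1/scrape"


def build_api_request(target_url, api_key, country=None, render_js=False):
    """Build the GET URL asking the (hypothetical) scraping API to fetch target_url."""
    params = {"api_key": api_key, "url": target_url}
    if country:
        params["country"] = country    # geo-targeting, e.g. "us"
    if render_js:
        params["render_js"] = "true"   # ask the service to render JavaScript
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_api_request(
    "https://example.com/products?page=2",
    api_key="YOUR_API_KEY",
    country="us",
    render_js=True,
)
print(request_url)
```

A single GET to the resulting URL would replace the proxy selection, rotation, and CAPTCHA handling you would otherwise implement yourself.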

Detailed Comparison: VPN vs Proxies vs Scraping APIs

Understanding the technical differences between VPNs, proxies, and APIs is an important step when choosing the right tool for your organization. Each option has its strengths and weaknesses in areas such as speed, scalability, and customization, so let’s quickly go over them:

Speed and Performance:

Because they skip data encryption and decryption, proxies usually offer faster performance than VPNs. Keep in mind that performance varies with the specific proxy type and VPN service being compared. For example, a residential proxy might be slower than a premium VPN.

APIs, particularly those optimized for scraping, often offer the best performance due to their optimized infrastructure and intelligent request routing designed specifically for web scraping jobs.

VPNs, while providing excellent security, introduce additional latency due to the encryption and decryption processes. However, some high-end VPN services have made huge strides in bridging this performance gap.

Ease of Scaling Operations:

VPNs struggle with large-scale scraping due to limited IP rotation capabilities. While some VPN providers offer dedicated IP options, they often come at a higher cost and may not provide the same level of flexibility as proxy-based solutions.

Proxies offer better scalability through vast IP pools and rotation capabilities. However, scaling proxy-based operations requires careful management of rotation patterns, rate limiting, and error handling. The overhead of maintaining a reliable proxy infrastructure increases proportionally with scale.

APIs offer the highest ease of scaling, as they abstract away the complexities associated with managing proxies and scaling infrastructure. By simply increasing your API usage, you can reliably scrape more data without significant additional development effort or maintenance overhead.

Customization and Defense Handling:

While VPNs are excellent for general privacy purposes, they offer few customization options for web scraping. Their primary focus is on encrypting all traffic at the network level, and they do not allow granular control over request headers, user-agent strings, or session handling, which doesn’t align with the requirements of web scraping.

Proxies are more flexible than VPNs. They enable you to customize request headers, manage cookies, and control how and when IP addresses are rotated. This level of control is essential for mimicking human browsing behavior and bypassing basic anti-scraping measures. You can also choose between residential, mobile, datacenter, or rotating proxies based on your specific needs.

Scraping APIs deliver the highest level of customization and flexibility. They offer a wide array of parameters to tailor your requests, such as rendering JavaScript content, setting custom headers, specifying geolocations, and managing sessions. Additionally, they automatically handle CAPTCHAs and other sophisticated anti-bot defenses.

Resource Requirements and Maintenance:

VPNs require minimal maintenance. They don’t need constant monitoring of individual IPs, but they do need periodic checks to ensure connection stability and optimal server selection. Managing multiple VPN connections can also become cumbersome and costly over time.

Managing proxies demands considerable effort. You need to source reliable proxies, monitor their performance, handle rotations, and replace them as they become ineffective or get banned. This ongoing maintenance can easily consume a lot of time and resources.
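
Part of that maintenance can be automated. The sketch below tracks consecutive failures per proxy and drops a proxy from the pool once it crosses a threshold. The `ProxyPool` class, the threshold of three failures, and the addresses are all assumptions for illustration.

```python
class ProxyPool:
    """Track proxy health and evict proxies that fail repeatedly."""

    def __init__(self, proxies, max_failures=3):
        self._failures = {proxy: 0 for proxy in proxies}
        self._max_failures = max_failures

    def active(self):
        """Return the proxies that are still considered usable."""
        return list(self._failures)

    def report_success(self, proxy):
        """Reset the failure count after a successful request."""
        if proxy in self._failures:
            self._failures[proxy] = 0

    def report_failure(self, proxy):
        """Count a failure (e.g. timeout or block) and evict at the threshold."""
        if proxy not in self._failures:
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self._max_failures:
            del self._failures[proxy]


# Hypothetical endpoints (documentation-only addresses).
pool = ProxyPool(["http://203.0.113.10:8080", "http://203.0.113.11:8080"])
for _ in range(3):
    pool.report_failure("http://203.0.113.10:8080")
print(pool.active())
```

A real setup would also need to source replacement proxies once the pool shrinks, which is the part that tends to cost the most time.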

Scraping APIs typically have the lowest resource overhead. They manage proxy sourcing, IP rotation, CAPTCHA solving, and error handling. This allows you to allocate your resources toward data analysis and other strategic tasks rather than operational maintenance, helping you save time and money in the long run.

Cost Considerations:

Proxies are available both for free and at various price points. Many providers offer attractive deals through on-demand and subscription models to support web scraping projects that require numerous IP addresses.

VPNs tend to be more expensive because they often include additional features like general web protection, password management, and ad-blocking capabilities. However, these features are not typically useful for data scraping, which means you may end up paying more without significant benefits.

Note: We do not recommend using free proxies or VPNs for web scraping. Doing so will increase the risk of getting your bots blocked and, potentially, your IP banned if there’s a leak at some point in the process.

APIs offer scalable pricing plans that align with your usage and needs. They provide cost-effective solutions, which can save money in the long run by reducing the need for additional resources and maintenance.

How About Combining Tools?

Sometimes, combining tools can provide the best results. For example, using a VPN with proxies or a scraping API can provide an additional layer of anonymity and security. This can be important, especially when dealing with sensitive data or operating in regions with strict internet regulations.

Similarly, integrating your own proxies with a scraping API can offer the best of both worlds. While the API handles complex tasks like CAPTCHA solving and JavaScript rendering, your dedicated proxies can address specific requirements such as compliance with data residency laws or accessing highly restricted content.

The decision to combine tools should be based on a thorough assessment of your project’s needs, the target website’s defenses, and any legal or ethical considerations.

Use Case Matrix

To summarize, here’s a table matching common requirements with the best solutions:

| Requirement | Best Solution | Notes |
| --- | --- | --- |
| Small-scale scraping | Proxies or API | Easy setup with minimal cost and complexity |
| High-volume data extraction | Proxies or API | Scalable solutions with automated IP rotation |
| Complex websites with anti-bot measures | API | Handles CAPTCHAs and JavaScript rendering automatically |
| Budget-conscious projects | Datacenter Proxies | Cost-effective but higher risk of detection |
| Sensitive data / Enhanced privacy | VPN | Encrypts traffic and masks origin IP |
| Minimal maintenance | API | Provider manages infrastructure and anti-bot measures |
| Maximum control | Self-managed Proxies | Full control over proxy management and configurations |
| Geo-targeting requirements | Residential Proxies or API | Access region-specific content |

Conclusion

Choosing the right tool for web scraping depends on your specific needs:

- VPNs: Suitable for small-scale scraping where you need anonymity but don’t require a large pool of IP addresses.

- Proxies: Ideal for projects that need scalability and more control over IP management, allowing for higher request volumes.

- APIs: Best for high-volume, complex scraping tasks where ease of use and efficiency are top priorities. API tools handle proxy rotation and anti-bot measures for you, saving time and effort.

Managing proxies and dealing with anti-scraping measures can become time-consuming and interrupt your data collection flow. The best solution is to use a tool that automates proxy rotation so you can get the data you need quickly and at a large scale.

ABOUT THE AUTHOR

IPwithease is aimed at sharing knowledge across varied domains like Network, Security, Virtualization, Software, Wireless, etc.