Machine learning is a subset of artificial intelligence (AI) that focuses on developing algorithms and statistical models that enable computers to learn from and make predictions or decisions based on data, without being explicitly programmed. In machine learning, models are trained using data, and as they process more information, they improve their ability to perform specific tasks or recognize patterns.

List of Top Machine Learning Interview Q&A

Q.1 What is the difference between supervised and unsupervised learning?

Supervised learning uses labeled data to train models, where the input-output relationship is explicitly provided; examples include classification and regression. Unsupervised learning works with unlabeled data, aiming to identify hidden patterns or groupings within the dataset; examples include clustering and dimensionality reduction.

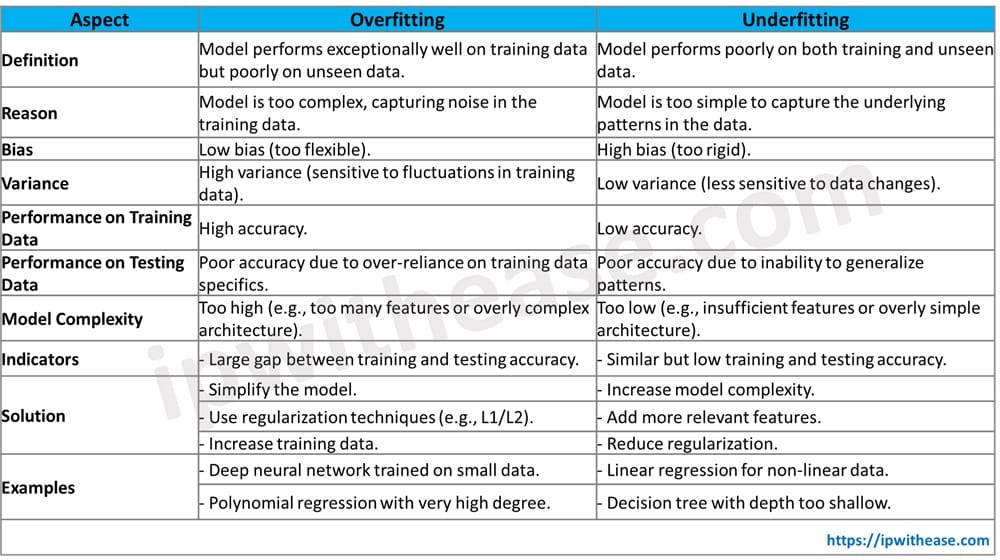

Q.2 Explain the bias-variance tradeoff.

The bias-variance tradeoff refers to the balance between two sources of error in a machine learning model:

- Bias: The error due to overly simplistic assumptions in the model, leading to underfitting and poor performance on both training and test data.

- Variance: The error due to excessive complexity in the model, causing it to overfit the training data and perform poorly on new, unseen data.

The tradeoff arises because reducing one typically increases the other. The goal is to find the model complexity that minimizes the total error from bias and variance combined, giving the best generalization to new data.
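
For squared-error loss, the tradeoff is often written as a decomposition of the expected prediction error. The notation here is an assumption added for illustration: the data follow $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat{f}$ is the model fitted on a random training set.

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

The noise term does not depend on the model, so only the first two terms can be traded against each other.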

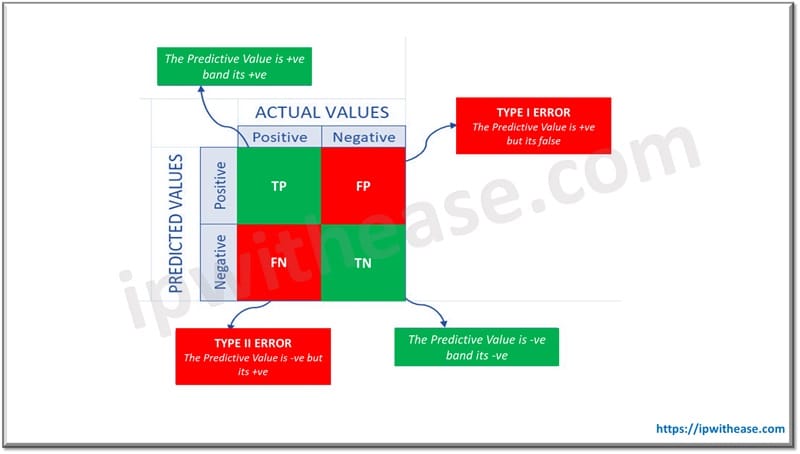

Q.3 What is a confusion matrix? Can you explain its components?

A confusion matrix is a table used to evaluate the performance of a classification model by comparing the predicted labels to the actual labels. The components are:

- True Positive (TP): Positive observations correctly predicted as positive.

- True Negative (TN): Negative observations correctly predicted as negative.

- False Positive (FP): Negative observations incorrectly predicted as positive.

- False Negative (FN): Positive observations incorrectly predicted as negative.
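
As a minimal sketch, the four counts can be tallied from two lists of binary labels. The function name `confusion_counts` and the sample labels are made up for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP and FN for a binary classification task."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}


# Illustrative labels, not real data.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
counts = confusion_counts(y_true, y_pred)
print(counts)  # {'TP': 3, 'TN': 3, 'FP': 1, 'FN': 1}
```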

Q.4 How do you handle missing data in a dataset?

Missing data can be handled in many ways:

- Remove rows or columns with missing values.

- Impute missing values using statistical methods (mean, median, mode) or predictive modelling.

- Use algorithms that can handle missing data intrinsically.
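
The first two options can be sketched in plain Python. This is an illustration only: it assumes missing values are represented as `None`, and the helper names `drop_missing` and `impute_column` are hypothetical.

```python
from statistics import mean, median


def drop_missing(rows):
    """Remove rows that contain at least one missing value (None)."""
    return [row for row in rows if None not in row]


def impute_column(values, strategy="mean"):
    """Replace None with the mean or median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed) if strategy == "mean" else median(observed)
    return [fill if v is None else v for v in values]


# Illustrative column with two missing entries.
ages = [25, None, 31, 40, None]
print(impute_column(ages, "mean"))    # fills with the mean of 25, 31, 40
print(impute_column(ages, "median"))  # fills with the median of 25, 31, 40
print(drop_missing([(25, "a"), (None, "b"), (40, None)]))
```

Dropping rows is the simplest choice but discards data, whereas imputation keeps every row at the cost of introducing estimated values.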

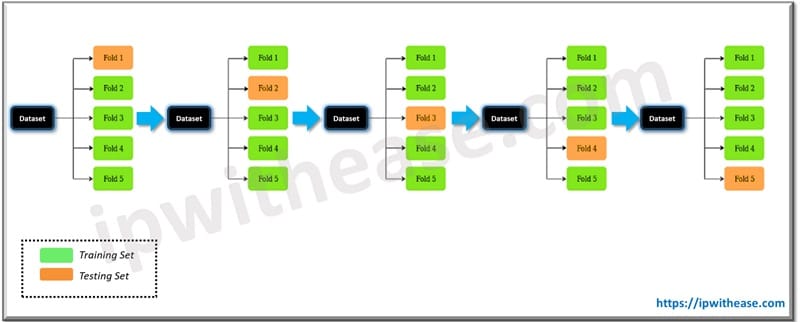

Q.5 What is cross-validation? What is its importance?

Cross-validation is a technique for evaluating machine learning models by training and testing them on different subsets of the data. It gives a more reliable estimate of how well the model generalizes to unseen data than a single train/test split. The most common type is k-fold cross-validation, where the dataset is divided into k subsets (folds), and the model is trained and tested k times, each time using a different fold as the test set and the remaining k - 1 folds for training. The k scores are then averaged.
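
The splitting step of k-fold cross-validation could look roughly like this. It is a sketch that only produces index splits; the name `k_fold_indices` is hypothetical, and shuffling the data beforehand (usually advisable) is left out for brevity.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


folds = list(k_fold_indices(10, 3))
for train, test in folds:
    print("train:", train, "test:", test)
```

Each sample appears in exactly one test fold, so every data point is used for evaluation once and for training k - 1 times.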

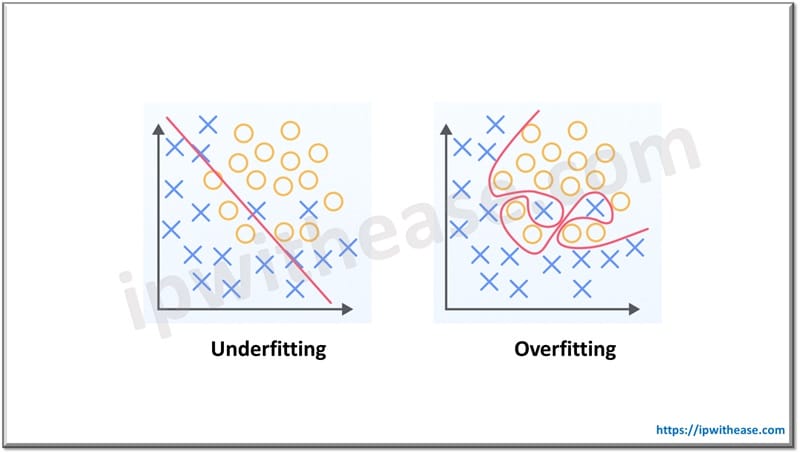

Q.6 Explain the concept of overfitting and underfitting in machine learning.

In machine learning, overfitting occurs when a model is too complex and captures noise or irrelevant details from the training data, leading to poor generalization on new data. Underfitting happens when a model is too simple and fails to capture the underlying patterns in the data, resulting in poor performance on both training and test sets. Achieving the right balance is key for optimal model performance.

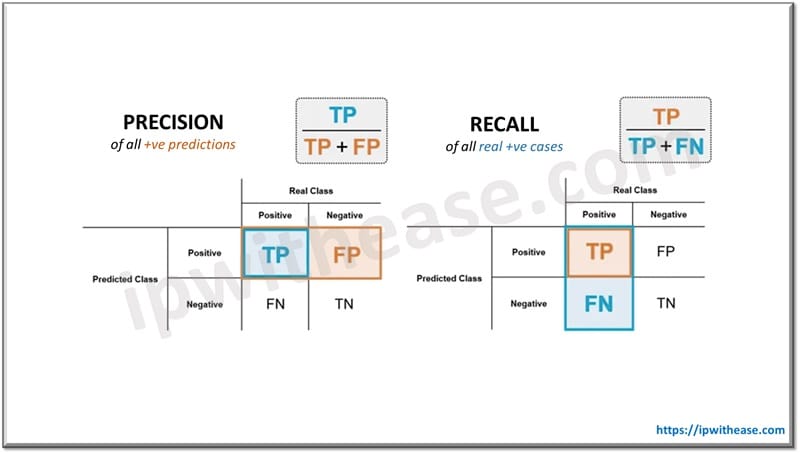

Q.7 What are precision and recall? How are they related to the F1 score?

- Precision is the ratio of correctly predicted positive observations to the total predicted positives (TP / (TP + FP)).

- Recall is the ratio of correctly predicted positive observations to all actual positives (TP / (TP + FN)).

- F1 Score is the harmonic mean of precision and recall (2 * (Precision * Recall) / (Precision + Recall)). It balances the tradeoff between precision and recall.
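
The three formulas above translate directly into code. In this sketch the counts are invented for illustration, and returning 0.0 when a denominator is zero is an assumed convention rather than part of the definitions.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Illustrative counts: 8 true positives, 2 false positives, 4 false negatives.
precision, recall, f1 = precision_recall_f1(tp=8, fp=2, fn=4)
print(round(precision, 3), round(recall, 3), round(f1, 3))  # 0.8 0.667 0.727
```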

Q.8 What is regularization in machine learning?

Regularization in machine learning is a technique used to prevent overfitting by adding a penalty term to the loss function. This penalty discourages the model from assigning excessively large weights to features, thereby simplifying the model and improving its ability to generalize to unseen data. Common types of regularization include L1 (Lasso), which penalizes the sum of absolute weights and promotes sparsity by driving some weights to zero, and L2 (Ridge), which penalizes the sum of squared weights and shrinks them toward zero without usually making them exactly zero.
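
To make the penalty term concrete, here is a small sketch that adds an L1 or L2 penalty to a mean squared error loss. The strength parameter `lam`, the function names, and the sample numbers are assumptions chosen for illustration.

```python
def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def l1_penalty(weights, lam):
    """Lasso-style penalty: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """Ridge-style penalty: lam times the sum of squared weights."""
    return lam * sum(w ** 2 for w in weights)


def regularized_loss(y_true, y_pred, weights, lam, kind="l2"):
    """Data loss plus the chosen penalty on the weights."""
    penalty = l1_penalty(weights, lam) if kind == "l1" else l2_penalty(weights, lam)
    return mse(y_true, y_pred) + penalty


# Illustrative values only.
weights = [0.5, -2.0, 0.0]
y_true = [1.0, 2.0, 3.0]
y_pred = [1.5, 2.0, 2.5]
print(regularized_loss(y_true, y_pred, weights, lam=0.1, kind="l1"))
print(regularized_loss(y_true, y_pred, weights, lam=0.1, kind="l2"))
```

The large weight (-2.0) contributes far more to the L2 penalty than to the L1 penalty, which is why L2 mainly discourages large individual weights.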

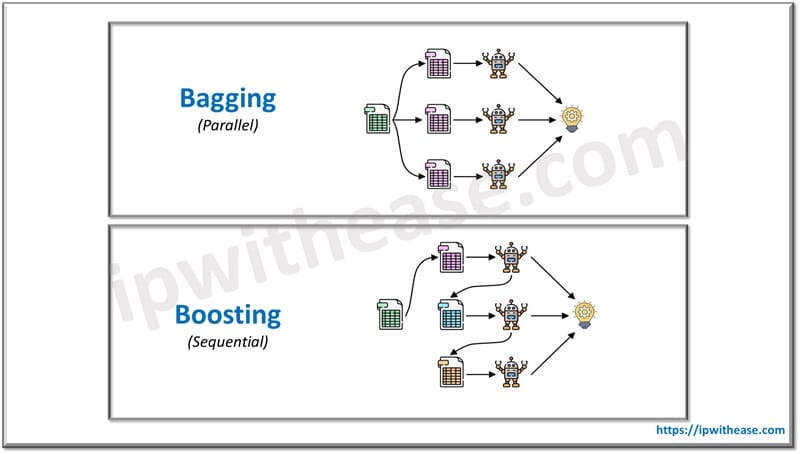

Q.9 Can you explain the difference between bagging and boosting?

- Bagging (Bootstrap Aggregating) is an ensemble technique that trains multiple models independently on different subsets of the data (obtained through bootstrap sampling) and combines their predictions by averaging (for regression) or voting (for classification). Example: Random Forest.

- Boosting is an ensemble technique that trains models sequentially, where each model tries to correct the errors of the previous one. The predictions are combined using a weighted sum. Examples: AdaBoost, Gradient Boosting.
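
The bagging side of this comparison can be sketched with a toy example. Everything here is hypothetical and simplified: the weak learner `train_stump` is a single threshold on a one-dimensional input, the dataset is invented, and boosting is not shown.

```python
import random
from collections import Counter


def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in data]


def train_stump(sample):
    """Hypothetical weak learner: threshold midway between the class means."""
    zeros = [x for x, label in sample if label == 0]
    ones = [x for x, label in sample if label == 1]
    if not zeros or not ones:
        # Degenerate bootstrap sample with one class: use the overall mean.
        threshold = sum(x for x, _ in sample) / len(sample)
    else:
        threshold = (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2
    return lambda x: 1 if x > threshold else 0


def bagged_predict(models, x):
    """Combine the independently trained models by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


rng = random.Random(0)
# Toy data: class 0 for x in 1..5, class 1 for x in 11..15.
data = [(x, 0) for x in range(1, 6)] + [(x, 1) for x in range(11, 16)]
models = [train_stump(bootstrap_sample(data, rng)) for _ in range(25)]
print(bagged_predict(models, 2), bagged_predict(models, 14))
```

Each model sees a different bootstrap sample and is trained without reference to the others, which is the key contrast with the sequential training used in boosting.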

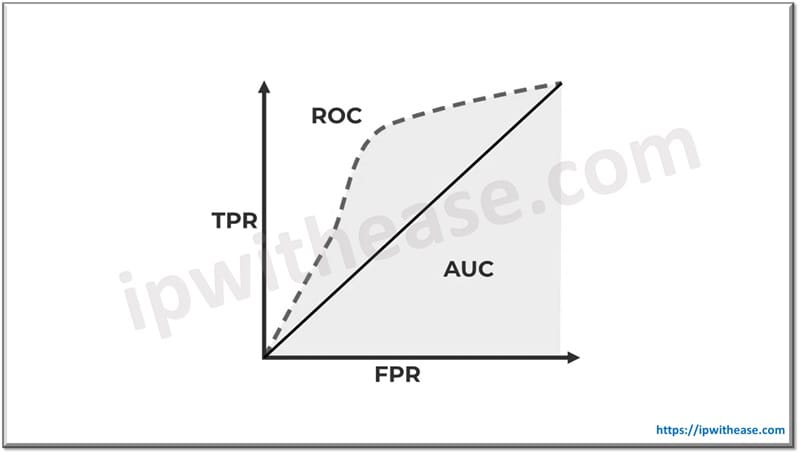

Q.10 What is the purpose of the ROC curve, and what does AUC represent?

The ROC (Receiver Operating Characteristic) curve is a graphical representation of a classifier’s performance, plotting the true positive rate (TPR) against the false positive rate (FPR) at various threshold settings. The AUC (Area Under the Curve) represents the overall ability of the model to discriminate between positive and negative classes. An AUC of 1 indicates a perfect model, while an AUC of 0.5 indicates a model with no discriminatory power.
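
One way to see what AUC measures is its pairwise interpretation: it equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. The sketch below uses that interpretation instead of integrating the curve; the function name `roc_auc` and the sample scores are illustrative.

```python
def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for y, s in zip(y_true, scores) if y == 1]
    negatives = [s for y, s in zip(y_true, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0  # positive ranked above negative
            elif p == n:
                wins += 0.5  # ties count as half
    return wins / (len(positives) * len(negatives))


y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
auc = roc_auc(y_true, scores)
print(auc)  # 0.75
```

The pairwise loop is quadratic in the number of examples, so it is meant for understanding rather than for large datasets.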

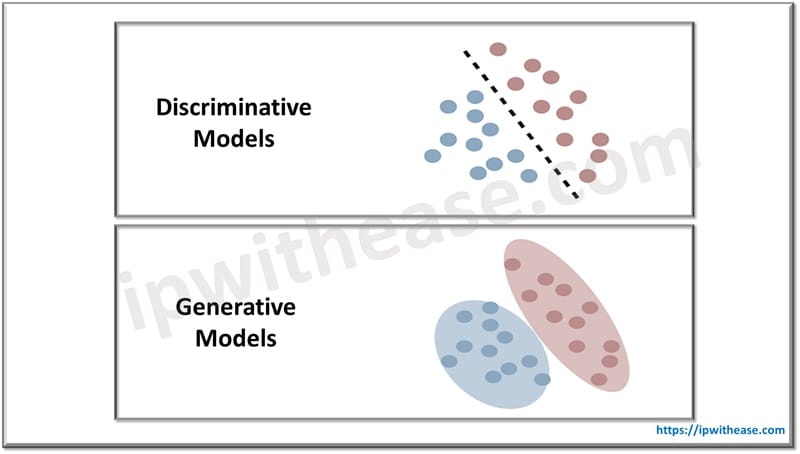

Q.11 What is the difference between a generative and a discriminative model?

- Generative models learn the joint probability distribution of the input features and the target labels, allowing them to generate new data points. Examples include Gaussian Naive Bayes and Hidden Markov Models.

- Discriminative models learn the conditional probability distribution of the target labels given the input features, focusing on the decision boundary between classes. Examples include Logistic Regression and Support Vector Machines.

Q.12 Explain the difference between parametric and non-parametric models.

- Parametric models have a fixed number of parameters, and their complexity does not change with the amount of training data. Examples include Linear Regression and Logistic Regression.

- Non-parametric models have a flexible number of parameters, which can grow with the amount of training data. Examples include k-Nearest Neighbours and Decision Trees.

Q.13 What is the curse of dimensionality, and how can it be addressed?

The curse of dimensionality refers to the difficulties that arise when working with high-dimensional data, such as increased computational cost, a higher risk of overfitting, and sparsity of data points (the amount of data needed to cover the feature space grows rapidly with the number of dimensions). It can be addressed with dimensionality reduction techniques like PCA (Principal Component Analysis), with feature selection methods, and, mainly for visualization, with t-SNE (t-Distributed Stochastic Neighbour Embedding).

Q.14 What is gradient descent, and how does it work?

Gradient descent is an optimization algorithm used to minimize the loss function of a model. It works by iteratively adjusting the model’s parameters in the direction of the negative gradient of the loss function with respect to the parameters. The step size is determined by the learning rate. Variants include batch gradient descent, stochastic gradient descent, and mini-batch gradient descent.
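
The update rule can be illustrated on a one-parameter problem. This sketch assumes the loss f(w) = (w - 3)^2, chosen only because its gradient 2(w - 3) and its minimum at w = 3 are easy to verify by hand.

```python
def gradient_descent(grad, w0, learning_rate=0.1, n_steps=100):
    """Repeatedly step against the gradient, starting from w0."""
    w = w0
    for _ in range(n_steps):
        w = w - learning_rate * grad(w)  # move in the negative gradient direction
    return w


# Gradient of the illustrative loss f(w) = (w - 3)^2.
w_min = gradient_descent(lambda w: 2 * (w - 3), w0=0.0)
print(w_min)  # very close to 3
```

With a learning rate of 0.1, each step shrinks the distance to the minimum by a factor of 0.8. A rate above 1.0 would make the iterates diverge on this loss.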

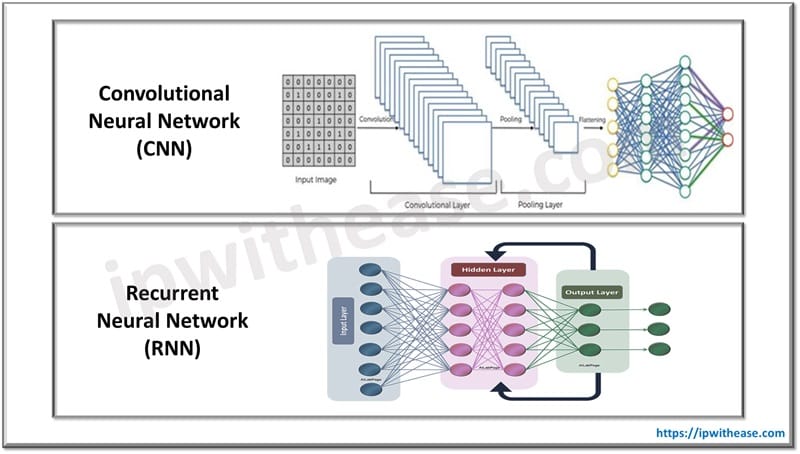

Q.15 What is the difference between a convolutional neural network (CNN) and a recurrent neural network (RNN)?

- CNNs are designed for processing grid-like data such as images. They use convolutional layers to automatically detect spatial hierarchies of features and are widely used in image recognition tasks.

- RNNs are designed for sequential data and can capture temporal dependencies. They use recurrent layers where the hidden state from one step is fed as input to the next step. RNNs are widely used in tasks like time series prediction and natural language processing.

Q.16 Explain the concept of transfer learning in machine learning.

Transfer learning involves taking a model pre-trained on a large dataset and fine-tuning it on a smaller, task-specific dataset. This approach can significantly reduce training time and improve performance, especially when the target task has limited data. It’s commonly used in deep learning applications like image classification and natural language processing.

Q.17 What is the role of activation functions in neural networks?

Activation functions introduce non-linearity into the neural network, allowing it to learn complex patterns in the data. Common activation functions include:

- Sigmoid: S-shaped curve, outputs values between 0 and 1.

- Tanh: Outputs values between -1 and 1.

- ReLU (Rectified Linear Unit): Outputs the input directly if it is positive; otherwise, it outputs zero.

- Leaky ReLU: Similar to ReLU but allows a small, non-zero gradient when the input is negative.
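
The four functions listed above are short enough to write out for scalar inputs. In this sketch the leaky slope `alpha=0.01` is a commonly used default rather than a fixed part of the definition, and the sigmoid is split into two branches to avoid overflow for large negative inputs.

```python
import math


def sigmoid(x):
    """S-shaped curve with outputs between 0 and 1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # safe for very negative x, where exp(-x) would overflow
    return z / (1.0 + z)


def tanh(x):
    """Outputs between -1 and 1."""
    return math.tanh(x)


def relu(x):
    """Pass positive inputs through; output zero otherwise."""
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Like ReLU, but with a small slope alpha for negative inputs."""
    return x if x > 0 else alpha * x


for value in (-2.0, 0.0, 3.0):
    print(value, sigmoid(value), tanh(value), relu(value), leaky_relu(value))
```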

Q.18 What is a hyperparameter, and how is it different from a parameter?

- Parameters are internal to the model and are learned from the training data, such as weights in a neural network.

- Hyperparameters are external to the model and set before the training process, such as learning rate, number of hidden layers, and batch size. They control the training process and model architecture.

Q.19 Explain the concept of feature engineering.

Feature engineering is the process of using domain knowledge to create new features or modify existing ones to improve the performance of a machine learning model. It involves techniques like normalization, encoding categorical variables, creating interaction terms, and deriving new variables from existing ones.
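
Three of the techniques mentioned (normalization, encoding categorical variables, and interaction terms) can be sketched in a few lines. The helper names and sample values are hypothetical, and min-max scaling is just one of several normalization schemes.

```python
def min_max_normalize(values):
    """Rescale numeric values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: no spread to rescale
    return [(v - lo) / (hi - lo) for v in values]


def one_hot_encode(values):
    """Turn each category into a 0/1 indicator vector."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return encoded, categories


def interaction(a, b):
    """Create an interaction term as the element-wise product of two features."""
    return [x * y for x, y in zip(a, b)]


print(min_max_normalize([10, 20, 30]))          # [0.0, 0.5, 1.0]
print(one_hot_encode(["red", "blue", "red"]))   # columns ordered ['blue', 'red']
print(interaction([1, 2], [3, 4]))              # [3, 8]
```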

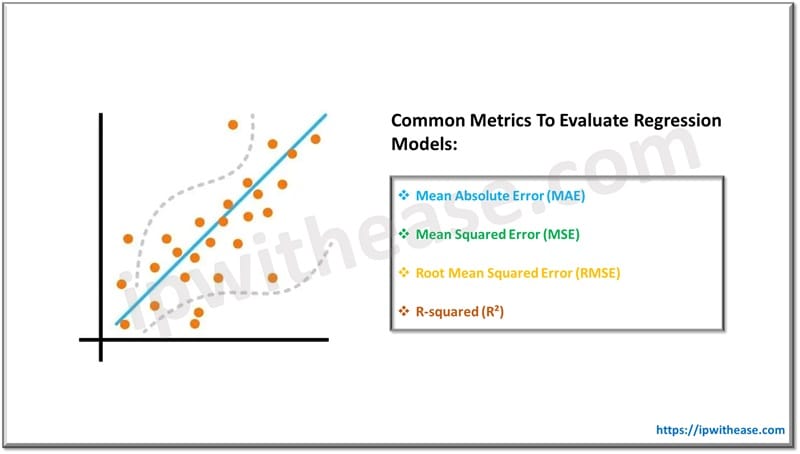

Q.20 What are some common metrics used to evaluate regression models?

Common metrics for evaluating regression models include:

- Mean Absolute Error (MAE): The average absolute difference between predicted and actual values.

- Mean Squared Error (MSE): The average squared difference between predicted and actual values.

- Root Mean Squared Error (RMSE): The square root of MSE.

- R-squared (R²): The proportion of variance in the target variable explained by the model.
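
All four metrics can be computed from the prediction errors. The following sketch uses invented sample values and assumes the targets are not all identical, since R² is undefined when the target has zero variance.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, MSE, RMSE and R-squared for paired targets and predictions."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e ** 2 for e in errors) / n
    rmse = math.sqrt(mse)
    mean_true = sum(y_true) / n
    ss_res = sum(e ** 2 for e in errors)                 # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    r2 = 1 - ss_res / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}


# Illustrative values only.
y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
metrics = regression_metrics(y_true, y_pred)
print(metrics)  # MAE 0.5, MSE 0.375, RMSE about 0.612, R2 about 0.949
```

RMSE is expressed in the same units as the target, which often makes it easier to interpret than MSE.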

ABOUT THE AUTHOR

You can learn more about her on her LinkedIn profile – Rashmi Bhardwaj