
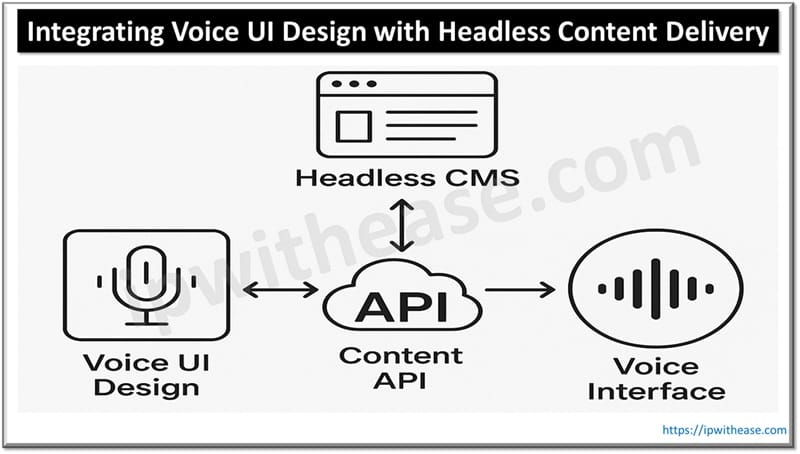

With the rise of voice user interfaces in applications like smart speakers, mobile assistants and car interfaces, information architects, content strategists and content developers need to rethink how information is presented and structured. It’s not as simple as taking content already created for the screen and converting it for a voice interface. Instead, voice demands close attention to meaning and context within the dialogue. Because headless content delivery can render modular, structured content across platforms in real time, combining voice UI (VUI) design with a headless CMS gives organizations the consistency, flexibility and scalability they need to thrive in a voice-first world.

Where does the expectation of voice-first experiences come from?

Voice-first interfaces shape expectations of how people should find and experience information. People expect to get answers in a hands-free, conversational manner, whether they are in the kitchen asking for the news of the day, in the car asking about a meeting time, or answering a smart device in another room that asks whether they’d like to continue. Voice UI design meets these needs through sound: tone, timing and inflection determine how well users understand and act on a response. Where a visual interface offers everything at once, voice is linear and temporal.

This means that content must be bite-sized, able to serve multiple purposes, and repurposable in multiple contexts. Understanding the distinction between a headless CMS and a traditional CMS becomes critical here, as only a headless approach enables the flexible, structured content delivery required to support dynamic, voice-first experiences across devices and scenarios.

Why is structured content important for voice?

There can be no voice experience without structured content. With a headless CMS, organizations can store content in discrete fields (titles, summaries, facts and answers) that can each be queried independently. The voice experience is then assembled from only what is needed in the moment. For example, if someone asks Alexa for “weather today,” an API can retrieve only the field holding today’s weather description rather than an unrelated summary about tomorrow. When content is unstructured, however, it is much harder to keep off-topic material out of the response.
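As a rough illustration of field-level retrieval, the sketch below picks a single field out of a structured entry. The entry shape and the field names (`today_description`, `tomorrow_summary`) are assumptions made for this example and do not come from any particular CMS.

```python
from typing import Any

# Hypothetical structured entry, shaped the way a headless CMS might return it.
WEATHER_ENTRY: dict[str, Any] = {
    "title": "Weather for Dallas",
    "today_description": "Sunny with a high of 31 degrees.",
    "tomorrow_summary": "Storms are likely in the afternoon.",
}


def select_fields(entry: dict[str, Any], fields: list[str]) -> dict[str, Any]:
    """Return only the requested fields that exist on the entry."""
    return {name: entry[name] for name in fields if name in entry}


def answer_weather_today(entry: dict[str, Any]) -> str:
    """Build a spoken answer from today's description and nothing else."""
    selected = select_fields(entry, ["today_description"])
    return selected.get("today_description", "Sorry, I don't have today's weather.")


print(answer_weather_today(WEATHER_ENTRY))
```

Because the answer is built from one named field, the summary about tomorrow never reaches the spoken response.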

How can a Headless Content Management System enable conversational flows?

Voice is not just about answering questions. It’s about helping people get from point A to B naturally, helping to shape experience. Through a headless CMS built on reusable blocks of content, designers and developers can script conversations based on intent. This includes guiding people through opening prompts, follow-up questions and confirmations. For example, an airline can use its CMS to manage prompts during the booking process, with questions like “When are you flying?” and “Would you like to know if there are seats available?” Structured content lets these blocks be reused across devices and stages of a purchase, and makes them easy to update.
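One way to picture an intent-driven flow is a small lookup from intents to reusable prompt blocks. In the sketch below, the intent names, the block IDs and the closing confirmation prompt are all hypothetical; only the two airline questions come from the example above.

```python
# Reusable prompt blocks, as they might be stored in a CMS (IDs are hypothetical).
PROMPTS: dict[str, str] = {
    "ask_date": "When are you flying?",
    "ask_seats": "Would you like to know if there are seats available?",
    "confirm": "Thanks. Shall I continue with the booking?",  # assumed wording
}

# Which prompt block follows each recognized intent (intent names are assumed).
FLOW: dict[str, str] = {
    "start_booking": "ask_date",
    "date_given": "ask_seats",
    "seats_answered": "confirm",
}

REPROMPT = "Sorry, I didn't catch that. Could you say it again?"


def next_prompt(intent: str) -> str:
    """Return the prompt text for the step that follows the given intent."""
    block_id = FLOW.get(intent)
    if block_id is None:
        return REPROMPT
    return PROMPTS[block_id]


for intent in ("start_booking", "date_given", "seats_answered"):
    print(next_prompt(intent))
```

Keeping the wording in `PROMPTS` separate from the ordering in `FLOW` means an editor could change a question without anyone touching the flow logic.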

Supporting Omnichannel Consistency Across Voice and Screen

Perhaps one of the most advantageous aspects of introducing a voice UI alongside headless delivery of content is the omnichannel approach to messaging. A user could engage with the brand via the voice-assistant option on their mobile device, retrieve the same information from the brand website, or engage with a smart display; regardless of how users interface, the experience is supported by structured content deployment from the same single source of truth.

This also simplifies content management by reducing the risk that disparate channels fall out of sync. If a company updates its privacy policy or a product description, a headless solution allows the change to reach voice and screen simultaneously in real time. The result is a cohesive company image and consumers who can trust what the brand says no matter how they interact with its content.

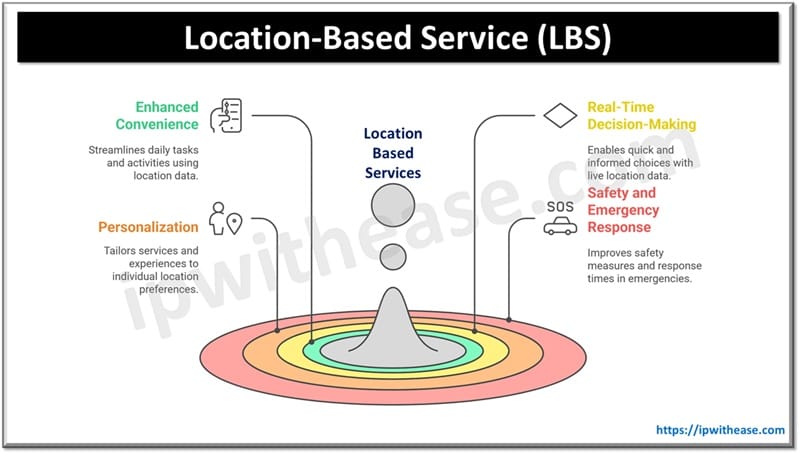

Creating Context-Specific Answers Based on Metadata and Taxonomy

Metadata and taxonomy are what make voice technology contextual in a headless system. Content can be tagged in a headless CMS not only by audience (age range, interests) but also by geography, device capabilities or intent. Such tags help a voice application’s logic select contextually relevant responses. For instance, a franchise restaurant might have a voice application that uses location metadata so that when users ask about hours and menus, they receive information for their local franchise, whether it’s in Dallas or Denver. This makes responses more accurate and reduces user frustration.
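The franchise example can be sketched as a lookup keyed on a location tag. The `HoursEntry` type and the opening hours below are placeholder values invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class HoursEntry:
    location: str  # metadata tag attached to the entry (assumed field name)
    hours: str     # spoken answer for that location


# Placeholder data; a real application would fetch this from the CMS.
ENTRIES = [
    HoursEntry(location="dallas", hours="We are open from 10 AM to 10 PM."),
    HoursEntry(location="denver", hours="We are open from 11 AM to 9 PM."),
]


def hours_for(location: str, entries: list[HoursEntry]) -> Optional[str]:
    """Return the hours for the entry whose location tag matches, if any."""
    wanted = location.strip().lower()
    for entry in entries:
        if entry.location == wanted:
            return entry.hours
    return None


print(hours_for("Denver", ENTRIES))
```

Returning `None` for an unknown location lets the voice application ask the user which franchise they mean instead of guessing.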

Connecting Voice Platforms Directly with CMS APIs

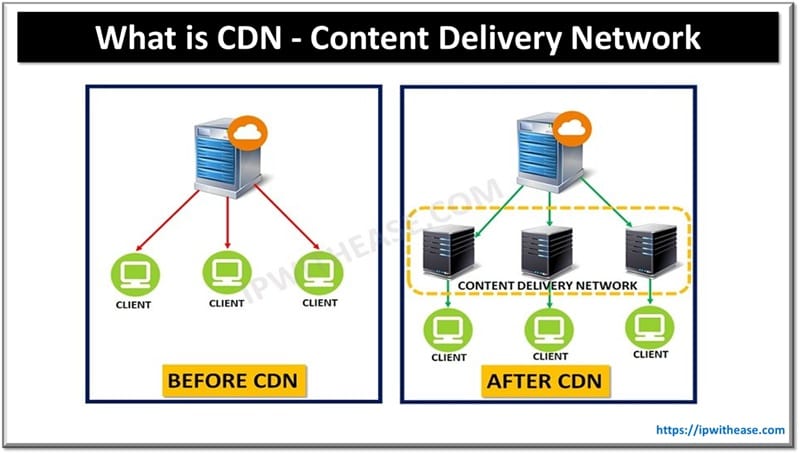

Voice applications rely almost entirely on backend information to provide usable content. Fortunately, headless solutions are API-first: the very framework of a headless CMS is built around APIs for content delivery and management. When developing voice applications, developers can connect the CMS content APIs not only to Amazon Alexa and Google Assistant but also to proprietary voice SDKs.

This not only ensures that content reaches the voice interface reliably, it also supports validating user requests, prompting actions and triggering automation. In addition, CMS APIs can support tailored requests that return only the specific content needed, which avoids unnecessary loading and reduces lag for on-demand voice implementations.
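A tailored request usually comes down to asking the content API for one content type and a short list of fields. The sketch below only builds such a URL and makes no network call; the base URL and the query parameter names (`content_type`, `fields`, `limit`) are assumptions, since each CMS defines its own.

```python
from urllib.parse import urlencode


def build_content_url(
    base_url: str, content_type: str, fields: list[str], limit: int = 1
) -> str:
    """Build a query URL that asks for only the named fields of one content type."""
    query = urlencode(
        {
            "content_type": content_type,
            "fields": ",".join(fields),  # the comma is percent-encoded by urlencode
            "limit": limit,
        }
    )
    return f"{base_url}?{query}"


# Hypothetical endpoint used purely for illustration.
url = build_content_url(
    "https://cms.example.com/api/entries", "faq", ["question", "answer"]
)
print(url)
```

Requesting two short fields instead of a full entry keeps the payload small, which matters when the user is waiting for a spoken reply.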

Team Collaboration for Voice-Ready Content

Successful voice interactions depend on a variety of roles, including but not limited to content strategists, VUI designers, developers and UX writers. A headless CMS promotes such teamwork by enabling each team to stay in its lane while contributing to a larger picture of content. For example, as content creators compose and edit the potential responses in the CMS, developers can focus on integration logic and flow management. Such parallel work shortens time to completion while also making testing, adjustments and edits easier to manage as user intent evolves over time.

Testing Voice Content in Real Time

Like any other digital experience, voice applications require testing and tuning on an ongoing basis. A headless CMS allows teams to update application content in real time without needing to recompile or redeploy the voice-driven experience. If a phrase needs to change or a hierarchy needs to be altered, teams can push changes quickly, resulting in faster adjustments to voice flows based on user sentiment. Furthermore, headless CMS platforms typically offer versioning, previews and permissioned access, which allow for more transparent, safer experimentation and rollout of changes. This is particularly beneficial for agile development of voice UIs.

Promoting Inclusivity with Audio-First Approach

Voice interfaces lend themselves to inclusive, accessible design. They offer screen-free and hands-free options for interaction, although that doesn’t mean they’re always usable by everyone. A headless CMS with accessible, structured content helps creators ensure responses are brief, to the point and written with all potential users in mind. For example, structured content is easier to feed into assistive technology, and developers can tailor voice output to needs such as cognitive or language-related challenges. Additionally, accessibility considerations can be built into the content models themselves so editors have clear, purposeful requirements when creating for voice delivery.
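Requirements built into a content model can be approximated with a simple check that runs before a response is published. In this sketch the word limit and the list of screen-only phrases are assumptions chosen for illustration, not established accessibility thresholds.

```python
MAX_WORDS = 30  # assumed upper bound for a single spoken response

# Phrases that only make sense on a screen (illustrative list, not exhaustive).
VISUAL_ONLY_PHRASES = ("click here", "see below", "shown above")


def voice_issues(text: str) -> list[str]:
    """Return a list of problems that make a response unsuitable for voice."""
    issues: list[str] = []
    word_count = len(text.split())
    if word_count > MAX_WORDS:
        issues.append(f"too long: {word_count} words (limit {MAX_WORDS})")
    lowered = text.lower()
    for phrase in VISUAL_ONLY_PHRASES:
        if phrase in lowered:
            issues.append(f"refers to the screen: '{phrase}'")
    return issues


print(voice_issues("Click here to see your order."))
print(voice_issues("Your order ships tomorrow."))
```

An empty list means the response passed both checks; editors would see the listed issues at authoring time rather than after users hear them.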

Future-Proof Voice Strategies with Scalable Content Architecture

As voice evolves from basic commands to complex multi-turn interactions, scaling becomes an important factor. A headless CMS provides the scalable architecture needed for future voice developments. Whether that means integrating with more advanced AI-driven assistants, expanding to more languages or supporting multimodal devices, structured content is the foundation on which consistency and growth rely. By investing in modular content now, brands will be able to offer richer, more sophisticated and dynamic voice experiences down the line.

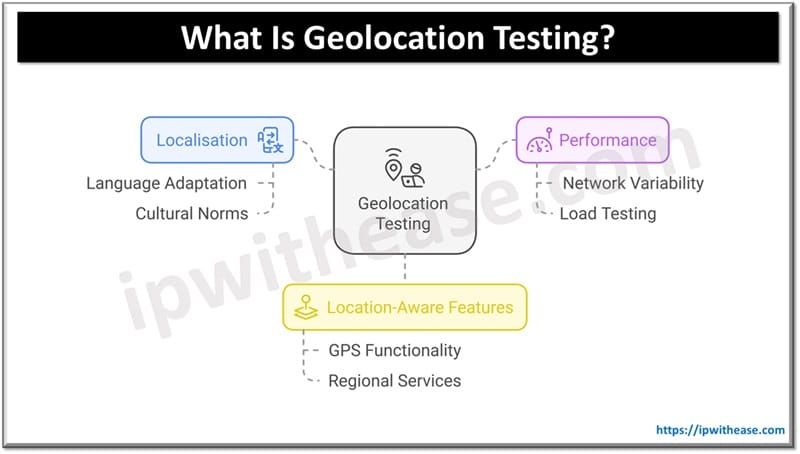

Supporting Multi-Language Voice Content for Global Users

When voice is an interface used by a worldwide audience, it has to speak to global users, quite literally. Offering voice content in different languages and dialects, with attention to cultural considerations, is a necessity. A headless CMS supports this by allowing multiple language fields on one content entry. For instance, a voice UI can select the right version of the content based on geolocation and device preferences. As a result, global users receive correct answers in their own native language or dialect, with authentic phrasing and proper articulation, which drives usability and loyalty.
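Multiple language fields on one entry are often resolved with a fallback chain: the exact locale first, then the base language, then a default. The sketch below assumes a field stored as a mapping from locale codes to text; the sample strings and the `en-US` default are illustrative.

```python
def localized(field: dict[str, str], locale: str, default: str = "en-US") -> str:
    """Pick the best available translation for a locale, with fallbacks."""
    base_language = locale.split("-")[0]  # "es-AR" -> "es"
    for candidate in (locale, base_language, default):
        if candidate in field:
            return field[candidate]
    raise KeyError(f"no translation for {locale!r} and no {default!r} default")


# One content entry field with several language versions (sample data).
SHIPPING_NOTICE = {
    "en-US": "Your order has shipped.",
    "es": "Tu pedido ha sido enviado.",
}

print(localized(SHIPPING_NOTICE, "es-AR"))
print(localized(SHIPPING_NOTICE, "fr-FR"))
```

Here a user with an `es-AR` device hears the generic Spanish version, while a `fr-FR` user falls back to the default because no French text exists yet.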

Formatting FAQs and Knowledge Bases for Voice Recognition

Voice interfaces are particularly effective for frequently asked questions and knowledge base content. Users ask questions in a natural way, such as “How can I change my password?” or “What’s your return policy?”, and expect short, accurate answers in return. With a headless CMS, teams can structure their responses as individual Q&A pairs with topical associations and intent-based categorizations. These can be retrieved in an instant by the voice interface and provide precise answers with no unnecessary explanations or menu digging.
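Structured Q&A pairs can be matched to an utterance in many ways. The sketch below uses plain keyword overlap as a stand-in for a real intent recognizer; the `QAPair` shape, the keyword sets and the answer texts are placeholders invented for this example.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class QAPair:
    intent: str
    question: str
    answer: str
    keywords: frozenset[str]


# Placeholder pairs; the answers are invented for illustration.
PAIRS = [
    QAPair("change_password", "How can I change my password?",
           "Open account settings and choose reset password.",
           frozenset({"password", "change", "reset"})),
    QAPair("return_policy", "What's your return policy?",
           "You can return items within 30 days.",
           frozenset({"return", "policy", "refund"})),
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into simple word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def best_answer(utterance: str, pairs: list[QAPair]) -> Optional[str]:
    """Return the answer whose keywords overlap the utterance the most."""
    words = tokenize(utterance)
    best = max(pairs, key=lambda pair: len(pair.keywords & words), default=None)
    if best is None or not (best.keywords & words):
        return None  # nothing matched; the caller should use a fallback
    return best.answer


print(best_answer("How can I change my password?", PAIRS))
```

A production voice platform would normally do the intent matching itself; the point here is only that each answer lives in its own addressable entry.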

Analyze to Improve Effectiveness of Voice Content

The best way to learn how people engage with a voice interface, and therefore how to create the most effective voice content, is to analyze usage. When analytics are built into both the CMS and the voice output system, teams can determine which questions are asked most, where people drop off in a voice experience and how often fallback responses are triggered. Analytics reveal what content needs to be refreshed, where there are gaps in the voice experience and which editorial improvements to prioritize. By analyzing at regular intervals, teams can ensure their voice experience grows as their audience grows.
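The two measurements mentioned above, most-asked questions and fallback frequency, can be computed from a simple interaction log. The event shape and the sample events below are assumptions for illustration.

```python
from collections import Counter
from typing import Any

# Hypothetical interaction log; a fallback event has no recognized intent.
EVENTS: list[dict[str, Any]] = [
    {"intent": "return_policy", "fallback": False},
    {"intent": "change_password", "fallback": False},
    {"intent": "return_policy", "fallback": False},
    {"intent": None, "fallback": True},
]


def top_intents(events: list[dict[str, Any]], n: int = 3) -> list[tuple[str, int]]:
    """Return the n most frequently recognized intents with their counts."""
    counts = Counter(event["intent"] for event in events if event["intent"])
    return counts.most_common(n)


def fallback_rate(events: list[dict[str, Any]]) -> float:
    """Return the share of interactions that ended in a fallback response."""
    if not events:
        return 0.0
    return sum(1 for event in events if event["fallback"]) / len(events)


print(top_intents(EVENTS, n=1))
print(fallback_rate(EVENTS))
```

A rising fallback rate is a practical signal that users are asking for content the CMS does not yet hold.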

Access to Supplementary Audio and Visual Content When Appropriate

Voice-first does not mean voice-only. Devices such as Echo Show or Google Nest Hub allow for multimodal experiences where voice might include an accompanying visual that adds emphasis to the interaction. A headless CMS supports this by enabling teams to create both the voice experience and the related images and text that could accompany it, in addition to any interactive components, all from one unified source repository. This means that a recipe could not only be read aloud but also accompanied by images of the finished dish. Similarly, turn-by-turn directions could come with a visual map to keep the user engaged in a multi-sensory experience.
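A multimodal response can be assembled from the same entry by adding visuals only when the device reports a screen. The field names (`spoken_summary`, `image_url`) and the sample recipe are hypothetical.

```python
from typing import Any

# One entry holding both the spoken text and an optional visual (sample data).
RECIPE_ENTRY: dict[str, Any] = {
    "spoken_summary": "Mix the batter, then bake for 25 minutes.",
    "image_url": "https://cms.example.com/assets/finished-cake.png",
}


def build_response(entry: dict[str, Any], has_screen: bool) -> dict[str, str]:
    """Always include speech; attach the image only for devices with a screen."""
    response = {"speech": entry["spoken_summary"]}
    if has_screen and entry.get("image_url"):
        response["image_url"] = entry["image_url"]
    return response


print(build_response(RECIPE_ENTRY, has_screen=False))
print(build_response(RECIPE_ENTRY, has_screen=True))
```

The speech is identical in both cases, so a smart speaker and a smart display stay consistent while the display gets the extra image.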

Conclusion

Therefore, the integration of voice UI design with headless content delivery is both forward-thinking and necessary for a generation of users who will interact with systems through voice-first channels. From smart speakers to vehicles to wearables, demand is rising for real-time access to content that is as timely and contextualized as possible. Content built for visual-first channels relies on design, styling and traditional delivery methods, so attempts to reformat it for a voice-first experience may fail; content planned with voice in mind, as a headless architecture allows, is far better placed to succeed.

With standards-based content modeling, API-first delivery and collaborative systems that support real-time updates, companies can position their content for voice well before the shift fully arrives. In a voice UI world, structured content isn’t an asset to be worked around the design but the foundation of the conversation. Assets can be categorized, tagged and contextualized for voice so that a system can query content based on intent, question or discovery patterns, rather than treating it as static boxes in a content template.

A modular construction allows voice interfaces to retrieve only the relevant snippets of content when needed, reducing lag and improving response time. Furthermore, once the underlying infrastructure supports this voice-ready construction, it also opens the door to more customized and localized responses, enabling companies to deliver more natural responses to a broader audience.

As people increasingly rely on voice as their primary method for research, task completion and natural interaction with companies, those ahead of the curve will be easier to find. These leaders won’t just answer questions with well-constructed content; they’ll deliver consistent communications that come across as timely and relevant in every interaction. In a space where on-demand, hands-free interactions are expected around the clock, merging voice UI with headless content delivery won’t just make sense; it will be essential for strategic advancement.

ABOUT THE AUTHOR

IPwithease is aimed at sharing knowledge across varied domains like Network, Security, Virtualization, Software, Wireless, etc.