What is Server Load Balancing?

Server Load Balancing is a mechanism for distributing workload across multiple servers to improve the availability and performance of the software applications running on them. The objective is to take the application load off a single server and distribute it among multiple physical servers, which leads to improved application performance, a smoother end-user experience, and the scalability to handle increased request volumes.

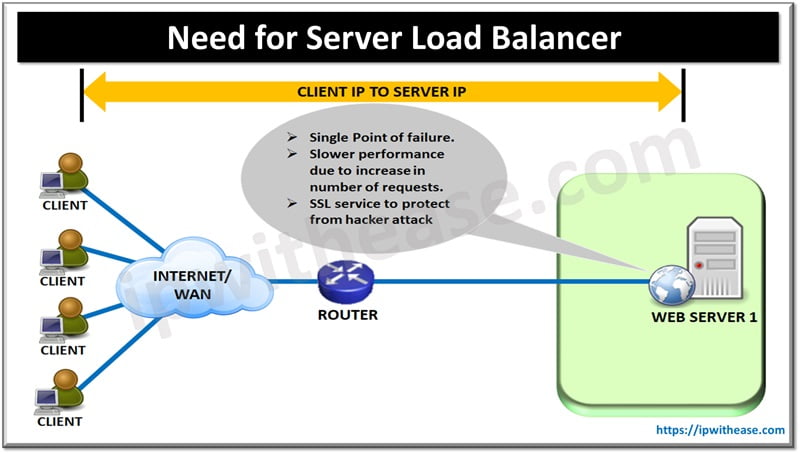

Need for Server Load Balancer

- A single web server is a single point of failure, with no backup physical server to provide redundancy if the primary server fails.

- A single server gets overloaded with client requests, which leads to slower processing and slower application response for end users.

- To protect confidential client data from theft, SSL services need to be installed on the web servers. This adds overhead to the web server, further reducing its processing speed and hence application performance.

A Server Load Balancer is the right solution for handling the conditions described above: non-availability, slow application performance, and exposure to attacks.

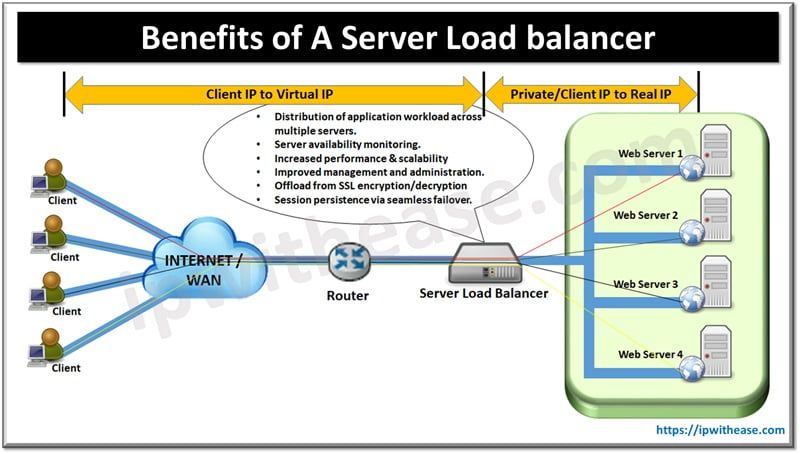

Benefits of a Server Load Balancer

- Distributes application workload across multiple servers

- Provides server- and application-level health monitoring

- Increases application performance and scalability

- Simplifies management and administration

- Offloads SSL encryption and decryption from the web servers

- Maintains session persistence for applications that manage client state on the server side

- Increases scalability by allowing new virtual and/or physical servers to be added transparently, without disruption

How does a Server Load Balancer work?

A Server Load Balancer (SLB) works on a model of virtual servers and real servers. A virtual server has an IP address that is exposed to clients over the Internet or WAN locations.

The client sends its request to this virtual IP address, which hides the real servers. Real servers are the application servers. One virtual server IP address on the SLB is bound to TCP/UDP ports on multiple real servers supporting the application. The client initiates the request for the application on a TCP/UDP port of the virtual IP on the SLB.
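The binding between a virtual IP and the real servers behind it can be pictured as a lookup table. The following is a minimal Python sketch, not any vendor's configuration model: the class names `RealServer` and `VirtualServer`, the `bind` and `pool_for` methods, and all addresses are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RealServer:
    """An application server that is hidden behind the virtual IP."""
    ip: str
    port: int


@dataclass
class VirtualServer:
    """The client-facing address exposed by the load balancer."""
    vip: str
    # Maps (protocol, virtual port) to the real servers backing that service.
    services: dict = field(default_factory=dict)

    def bind(self, protocol, port, real_servers):
        """Bind one virtual port to a pool of real servers."""
        self.services[(protocol, port)] = list(real_servers)

    def pool_for(self, protocol, port):
        """Return the real servers for a service, or an empty list."""
        return self.services.get((protocol, port), [])


# Hypothetical addresses: one virtual IP fronting two services.
vs = VirtualServer(vip="203.0.113.10")
vs.bind("tcp", 80, [RealServer("10.0.0.11", 8080), RealServer("10.0.0.12", 8080)])
vs.bind("tcp", 21, [RealServer("10.0.0.21", 21)])

for server in vs.pool_for("tcp", 80):
    print(f"{vs.vip}:80 -> {server.ip}:{server.port}")
```

Clients only ever see `vs.vip`; which real server answers a given request is decided by the load balancer.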

In response, the SLB forwards the request to one of the real servers for that application, chosen according to the configured load balancing algorithm. While operating, the SLB monitors the health of all the real servers and stops sending new requests to servers that are not available.

Once a real server becomes available again, the SLB puts it back in the list of servers accepting new connection requests. The SLB maps one logical (virtual) server connection to multiple physical (real) servers. This allows a single IP address (the virtual server IP address) to serve as the connection point for multiple TCP/UDP services such as HTTP, FTP or Telnet, rather than each service requiring its own IP address. These services can be located on a single server or across multiple servers.
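The health-monitoring behaviour described above (remove a failed server from rotation, then re-add it once it recovers) might look roughly like the Python sketch below. The names `HealthTrackedPool`, `run_checks`, `eligible` and `tcp_probe` are assumptions made for this example. A plain TCP connect is only one possible kind of probe, and real products typically add check intervals and retry thresholds that are omitted here.

```python
import socket


def tcp_probe(server, timeout=1.0):
    """Return True if a TCP connection to (host, port) succeeds."""
    try:
        with socket.create_connection(server, timeout=timeout):
            return True
    except OSError:
        return False


class HealthTrackedPool:
    """Tracks which real servers may receive new requests."""

    def __init__(self, servers, probe=tcp_probe):
        self.servers = list(servers)
        self.probe = probe                # callable: server -> bool
        self.healthy = set(self.servers)  # assume healthy until a check fails

    def run_checks(self):
        """Probe every server; drop failures and re-admit recoveries."""
        for server in self.servers:
            if self.probe(server):
                self.healthy.add(server)
            else:
                self.healthy.discard(server)

    def eligible(self):
        """Servers that may currently receive new requests, in order."""
        return [s for s in self.servers if s in self.healthy]


# Demo with a fake probe so the example runs without a network.
status = {("10.0.0.11", 8080): True, ("10.0.0.12", 8080): True}
pool = HealthTrackedPool(status.keys(), probe=lambda s: status[s])

status[("10.0.0.12", 8080)] = False   # simulate a server failure
pool.run_checks()
after_failure = pool.eligible()

status[("10.0.0.12", 8080)] = True    # simulate recovery
pool.run_checks()
after_recovery = pool.eligible()
```

In a real deployment `run_checks` would be called periodically, and the selection algorithm would choose only from `eligible()`.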

Methods of Server Load Balancing

Server Load Balancing can be performed broadly in two ways: hardware-based and software-based.

1. Hardware Load Balancing

Hardware load balancers can serve multiple servers in a cluster. This type of load balancer typically has:

- Robust topology

- High availability

- Higher cost

Pros:

Uses a circuit-level network gateway for traffic routing.

Cons:

Costlier than software load balancing solutions.

2. Software Load Balancing

Software-based load balancers are commonly used and usually come integrated with a software or application package.

Pros:

Cheaper than hardware load balancers. More customizable, with intelligent routing based on input parameters.

Cons:

Additional server hardware must be procured when the load balancer is required to run on a physically separate platform.

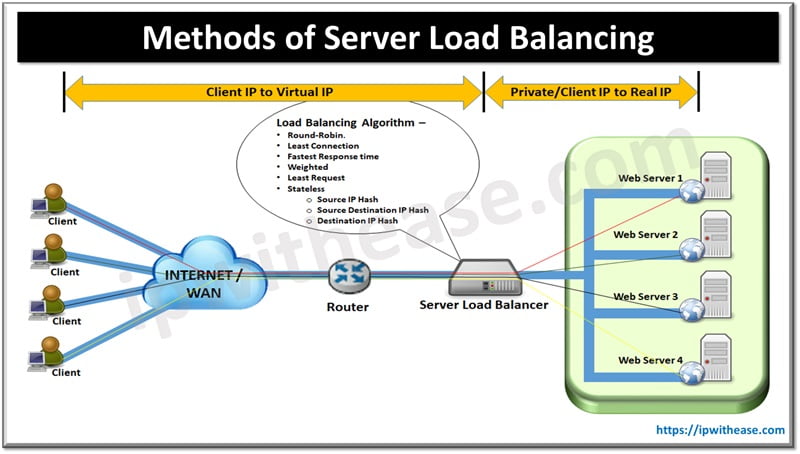

As a generalization, below are the major load balancing algorithms that OEM SLBs generally support:

- Round Robin – Simple rotation: requests are distributed one by one across the real servers in sequence, irrespective of weight.

- Least Connection – Selects the server that currently has the fewest active connections.

- Fastest Response time – Selects the server with the fastest SYN-ACK response time.

- Weighted – Selects the real server based on administratively assigned weights. In the event of a tie, Round Robin or Least Connection may be used as a tie-breaker, depending on the configured criteria.

- Least Request – Selects the real server port (for HTTP traffic) with the fewest requests.

- Stateless – Selects the real server by hashing the source, the source-destination pair, or the destination of the request.

The three hash variants are Source IP Hash, Source-Destination IP Hash, and Destination IP Hash.
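To make the selection rules above more concrete, here is a minimal Python sketch of four of them. It is an illustration under stated assumptions rather than how any particular SLB implements them: the class and function names are hypothetical, "Weighted" is interpreted as weighted round robin, and SHA-256 is an arbitrary choice of hash function.

```python
import hashlib
import itertools


class RoundRobin:
    """Hands out servers one by one in a fixed, repeating sequence."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(list(servers))

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Picks the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self):
        # Ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        """Call when a connection to `server` closes."""
        self.active[server] -= 1


class Weighted:
    """Weighted round robin: weight w means w turns per cycle."""

    def __init__(self, weights):
        expanded = [s for s, w in weights.items() for _ in range(w)]
        self._cycle = itertools.cycle(expanded)

    def pick(self):
        return next(self._cycle)


def source_ip_hash(client_ip, servers):
    """Stateless selection: the same client IP always maps to the same server."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["srv-a", "srv-b", "srv-c"]  # hypothetical real servers

rr = RoundRobin(servers)
rr_picks = [rr.pick() for _ in range(4)]

lc = LeastConnections(["srv-a", "srv-b"])
lc_first, lc_second = lc.pick(), lc.pick()
lc.release("srv-b")          # srv-b now has fewer connections than srv-a
lc_third = lc.pick()

wt = Weighted({"srv-a": 2, "srv-b": 1})
wt_picks = [wt.pick() for _ in range(4)]

sticky = source_ip_hash("198.51.100.7", servers)
```

Note that the hash-based function keeps no per-connection table, which is why that approach is described as stateless. The trade-off is that adding or removing a server changes `len(servers)` and so remaps many clients.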

ABOUT THE AUTHOR

You can learn more about her on her linkedin profile – Rashmi Bhardwaj